
Published

16 May 2025

Written by Tim Weekes, Senior Consultant

Tim trained as a journalist and wrote for professional B2B publications before joining TKO in 1998. In his time at TKO, Tim has worked in various client service roles, helping electronics companies to achieve success in PR, advertising, lead generation and digital marketing campaigns. He now supports clients with strategic messaging, the writing of technical and marketing promotional materials, and the creation of videos and podcasts. Tim has a BA (Hons) degree and a Diploma in Direct Marketing.

Why IoT power optimisation matters

Reducing power consumption is one of the most important requirements of an IoT (internet of things) product design. This is particularly the case if the product is battery-powered, and untethered from a mains or other type of wired power supply.

Even for mains-powered devices, power consumption can be an important consideration because of the need to comply with regulations governing stand-by power consumption, and because inefficient operation generates waste heat which needs to be dissipated safely without compromising the reliability of heat-sensitive components.

For battery-powered devices, however, the question of power consumption is even more salient. Reducing power consumption in battery-powered IoT devices offers the following benefits:

- Extend battery life – longer battery run-time reduces the frequency with which users have to recharge the battery of small devices such as smart watches and true wireless stereo earbuds, making usage more convenient and leading to greater user satisfaction.

- Save money – in industrial IoT products such as electronic shelf labels, replacing the primary (non-rechargeable) battery requires a technician to attend to the device. The cost of technician time adds greatly to the total cost of ownership of the product.

- Go green – battery disposal adds to the mountain of electronic waste that the world generates. Extending battery run-time reduces the number of batteries which need to be disposed of.

- Improve reliability – IoT power optimisation can extend the intervals between battery replacements. Low-power design techniques can even enable a device to run on harvested energy backed by a battery or supercapacitor, promising never-ending power. Reducing or even eliminating the risk of downtime caused by a battery failure increases system reliability.

Low-power design techniques can also mean that the system generates less waste heat and runs cooler. Operation of electronic components at lower temperatures is correlated with longer component life and higher reliability.

This guide describes important elements of an IoT power system optimisation, including relevant aspects of hardware design, firmware implementation, and wireless communications protocols.

The reader will gain an understanding of valuable low-power design techniques that they can implement in IoT product designs.

Understanding power consumption in IoT devices

There are broadly three factors that impinge on battery life in IoT devices:

- The rate at which the components of the system consume power when they are running

- The energy capacity of the battery (which is a function of the battery’s size, and its chemistry)

- The duty cycle – the proportion of time that the system spends in active mode rather than in quiescent or stand-by mode
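The way these three factors combine can be sketched with a simple calculation. The example below is an idealised estimate only: it ignores battery self-discharge, temperature effects and cut-off voltage, and the function names and all of the figures are hypothetical, chosen purely for illustration. Here `duty_cycle` is taken to mean the fraction of time in which the system is active.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current for a device that alternates between two states."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_hours(capacity_mah, active_ma, sleep_ma, duty_cycle):
    """Idealised run-time: nominal capacity divided by average current."""
    return capacity_mah / average_current_ma(active_ma, sleep_ma, duty_cycle)


# Hypothetical device: 220 mAh cell, 5 mA when active, 2 uA when asleep,
# active for 0.1% of the time.
hours = battery_life_hours(220.0, 5.0, 0.002, 0.001)
print(f"Estimated run-time: {hours / 24:.0f} days")
```

Even with the device asleep for 99.9% of the time, the active current still accounts for most of the average in this example, which is why both the active current and the duty cycle deserve attention.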

Power-consuming components

While the typical consumer or industrial IoT device’s PCB contains hundreds of components, typically five or fewer are responsible for nearly all of the device’s power consumption. In general, the biggest consumers are high-speed digital devices: microcontrollers, microprocessors, graphics processing units (GPUs), FPGAs, and wireless transceivers/controllers.

In addition, sensors, which can provide either an analogue or digital output, can consume significant amounts of power if operating continuously, or if they are configured to operate at a high output data rate.

Battery capacity

In many IoT devices, the energy capacity and therefore the size of the battery determines the size and weight of the entire product. Reducing IoT power consumption is often the best way for the system designer to reduce a product’s size if it enables the use of a smaller battery.

For many years, the nickel metal hydride (NiMH) battery chemistry was favoured in small wireless devices: NiMH batteries are robust and safe, and battery management and charging are easy to implement.

Today, IoT devices are most likely to feature a kind of lithium battery. Compared to NiMH, lithium provides much higher energy density: for any given energy capacity, a lithium battery will be much smaller than the equivalent NiMH unit.

Because of the huge volume of lithium batteries built into mobile phones, laptop computers and electric vehicles, their price dropped steeply through the 2010s and early 2020s, making their use much more economically attractive in IoT devices.

But lithium imposes extra burdens on the IoT product developer:

- A lithium battery requires a battery management system (BMS) which implements safety processes to prevent thermal runaway, a dangerous process which can cause a faulty lithium battery to catch fire or explode. The BMS should also implement functions such as cell balancing, which keeps the cells in a multi-cell battery at the same voltage and state of charge, to maximise battery life.

- A lithium battery requires the implementation of a special constant current/constant voltage (CC/CV) charging control circuit to avoid premature ageing of the battery cells if exposed to excessive voltage when nearly fully charged.

System operating modes

The main controller or processor in a battery-powered IoT device normally consumes much more power when running at full speed than any other component in the system. One of the most important ways to reduce power consumption in IoT devices is therefore to shorten the time periods in which the MCU or MPU is active – an approach known as duty cycling. The aim is to accelerate processor operations so that the time between starting and finishing data processing is truncated: this has the effect of extending the period in which the MCU or MPU is in sleep or quiescent mode.

In sleep mode, an MCU’s or MPU’s power consumption is much lower than when it is active.
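The benefit of finishing work quickly and returning to sleep can be illustrated by comparing the charge drawn in one wake/sleep period. This is a rough sketch under stated assumptions: the function name and the current and timing figures are hypothetical, and the energy cost of the sleep/wake transitions themselves is ignored.

```python
def charge_per_period_uc(active_ma, active_ms, sleep_ua, period_ms):
    """Charge drawn in one wake/sleep period, in microcoulombs."""
    if active_ms > period_ms:
        raise ValueError("active time cannot exceed the period")
    active_uc = active_ma * active_ms                        # mA x ms = uC
    sleep_uc = sleep_ua * (period_ms - active_ms) / 1000.0   # uA x ms = nC, so divide by 1000
    return active_uc + sleep_uc


# Hypothetical comparison over a 1 s period with a 1 uA sleep current:
# a slower clock (3 mA for 20 ms) against a faster clock (5 mA for 8 ms).
slow = charge_per_period_uc(active_ma=3.0, active_ms=20.0, sleep_ua=1.0, period_ms=1000.0)
fast = charge_per_period_uc(active_ma=5.0, active_ms=8.0, sleep_ua=1.0, period_ms=1000.0)
print(f"slow: {slow:.2f} uC, fast: {fast:.2f} uC")
```

In this example the faster configuration draws more current while active, but less charge overall, because it spends longer asleep. Whether this holds for a real device depends on its datasheet figures.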

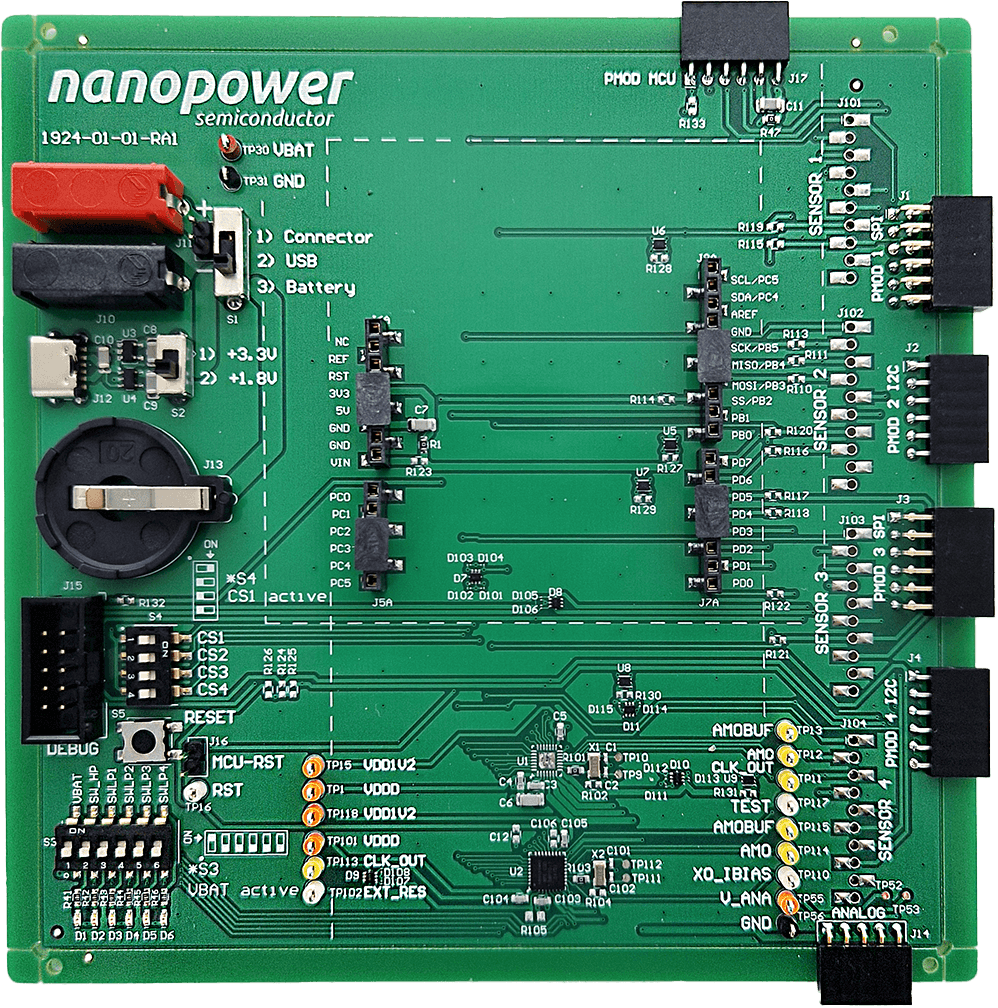

A related low-power design technique is to use a power-saving companion product such as the nPZero IC from Nanopower Semiconductor. In wireless IoT systems, the nPZero takes over control duties from a host MCU when the system’s sensors only require monitoring, and switches the power-hungry MCU off. In this idle mode, the nPZero typically reduces operating current to less than 100nA.

When an event occurs that requires complex data processing or wireless communication, the nPZero wakes up the host MCU. After the MCU has completed its operation, the nPZero takes back control and switches the MCU off. This approach can reduce typical IoT system power consumption by as much as 90%.

Hardware-level power optimisation

One of the most important ways to reduce the power consumption of IoT devices is to limit the energy usage of the hardware components on the board. Four low-power design techniques contribute to the optimisation of an IoT power system.

Select low-power components

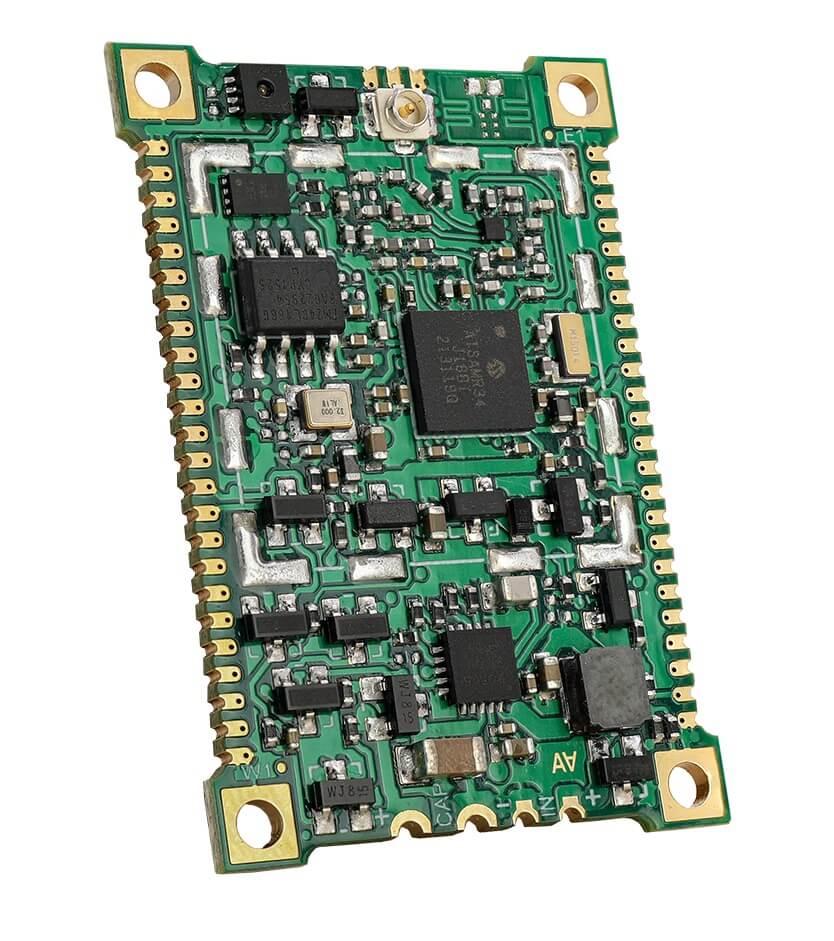

In response to strong demand from IoT device manufacturers, component suppliers have been steadily improving the low-power performance of the biggest energy users in digital circuits – the MCUs, MPUs, and FPGAs.

A search for ‘ultra-low power MCU’ shows that all the main manufacturers of MCUs include in their portfolio a series of products that boast special features for reducing power consumption in both active and quiescent modes. For instance, the STM32U031, an MCU from STMicroelectronics based on a 56MHz Arm® Cortex®-M0+ core, draws just 160nA in stand-by mode with the real-time clock running, and 52µA/MHz in active mode. It can wake up from a low-power stop mode in just 4µs.

In low- and mid-density FPGAs, Microchip FPGAs built on non-volatile memory have substantially lower leakage current than competing SRAM-based FPGAs, cutting power consumption by as much as 50%.

Similar low-power choices can be made for other high-energy component types, including sensors and RF transceivers.

Employ power gating

Power gating involves selectively shutting off the power supply to portions of a circuit that are not in active use. This directly reduces leakage current.

Power gating can be implemented in a highly granular fashion, applying to blocks as small as a logic gate. An easier implementation of power gating is coarse-grained, in which it is applied to larger functional blocks such as a CPU core. Retention registers or other techniques may be used to maintain important state information before a block is powered down.

In implementing power gating, it is essential for the system designer to take account of the latency between triggering a gated block to wake up, and the circuit becoming fully operational.
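One way to reason about this trade-off is to compare the energy saved by removing leakage with the energy spent waking the block up again, while also checking that the wake-up latency is acceptable. The sketch below assumes a simple model with hypothetical names and figures; real values would come from measurement or the device datasheet.

```python
def gating_break_even_ms(leakage_uw, wake_energy_uj):
    """Idle time beyond which gating a block saves energy, in milliseconds."""
    if leakage_uw <= 0:
        raise ValueError("leakage_uw must be positive")
    return wake_energy_uj / leakage_uw * 1000.0   # uJ / uW = seconds


def should_gate(idle_ms, wake_latency_ms, max_latency_ms, leakage_uw, wake_energy_uj):
    """Gate only if the wake-up is fast enough and the idle period is long enough."""
    meets_deadline = wake_latency_ms <= max_latency_ms
    saves_energy = idle_ms > gating_break_even_ms(leakage_uw, wake_energy_uj)
    return meets_deadline and saves_energy


# Hypothetical block: 50 uW leakage, 2 uJ to restore power and state.
print(gating_break_even_ms(50.0, 2.0))           # break-even idle time in ms
print(should_gate(100.0, 0.5, 1.0, 50.0, 2.0))   # long idle period, latency acceptable
```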

Take advantage of voltage and clock scaling capabilities

The dynamic (switching) power consumption of a digital circuit is proportional to the square of its supply voltage, so even small voltage reductions yield substantial power savings. Reducing the frequency (clock speed) of an MCU’s, MPU’s or SoC’s CPU core(s) also reduces its power consumption. This is reflected in the datasheet of devices such as MCUs, in which active mode operating current is expressed in units of µA/MHz.

This technique for reducing the voltage and frequency when a digital device’s workload is reduced is known as dynamic voltage and frequency scaling (DVFS). DVFS might be applied, for instance, when the radio SoC in a remote wireless sensor is performing periodic sensor polling only.
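The relationship behind DVFS can be sketched with the standard approximation for CMOS switching power, P = C × V² × f. The example below is illustrative only: the effective capacitance and the two operating points are hypothetical, and leakage (static) power is ignored.

```python
def dynamic_power_mw(c_eff_pf, voltage_v, freq_mhz):
    """Switching power P = C * V^2 * f, returned in milliwatts."""
    return c_eff_pf * 1e-12 * voltage_v ** 2 * freq_mhz * 1e6 * 1e3


def energy_per_task_uj(c_eff_pf, voltage_v, cycles):
    """Switching energy for a fixed number of clock cycles, E = C * V^2 * N, in microjoules."""
    return c_eff_pf * 1e-12 * voltage_v ** 2 * cycles * 1e6


# Hypothetical core with 20 pF effective switched capacitance.
full_speed = dynamic_power_mw(20.0, 1.2, 48.0)   # 1.2 V at 48 MHz
scaled = dynamic_power_mw(20.0, 0.9, 16.0)       # 0.9 V at 16 MHz
print(f"full speed: {full_speed:.4f} mW, scaled: {scaled:.4f} mW")

# Energy for the same one-million-cycle task at each voltage.
print(energy_per_task_uj(20.0, 1.2, 1_000_000), energy_per_task_uj(20.0, 0.9, 1_000_000))
```

In this simplified model, the switching energy needed to complete a fixed task depends on the voltage but not on the frequency. Lowering the clock reduces power, but the saving in energy per task comes from the lower voltage that the slower clock permits.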

The pre-configured sleep, stop, deep sleep and shutdown modes available in devices such as MCUs and MPUs also use different voltage and frequency settings for different levels of activity and environmental conditions.

The effective implementation of DVFS may be enhanced by task-aware scheduling, in which the application is designed to group computationally intensive tasks, to minimise transitions between frequency/voltage states, and to schedule non-critical tasks during low-power periods.

Design board layout with a view to minimising power consumption

The techniques above help to minimise power consumption at the level of individual components, but the layout of the board also has an effect on system power consumption.

Important considerations to take into account include:

- Optimising power plane design – use wide and short traces for power rails to minimise their resistance, and implement proper power and ground planes to reduce impedance. Board designs should consider splitting power planes between different voltage domains.

- Placing components strategically – place high-current components close to power sources to reduce power losses in board traces, and group components with similar voltage requirements.

- Supporting sleep mode operation – separate always-on blocks from circuits that can be put into a sleep or quiescent mode. The layout should also enable efficient power gating through proper isolation.
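The advice to keep power traces wide and short can be quantified with a simple resistance estimate, R = ρL/(w × t), and the resulting I²R loss. The sketch below assumes a copper resistivity of roughly 1.68 × 10⁻⁸ Ω·m at 20°C and a copper thickness of 35µm (approximately 1oz copper); the function names and trace dimensions are hypothetical, and temperature rise is ignored.

```python
COPPER_RESISTIVITY_OHM_M = 1.68e-8   # approximate value at 20 degC


def trace_resistance_ohm(length_mm, width_mm, thickness_um=35.0):
    """DC resistance of a rectangular copper trace."""
    area_m2 = (width_mm * 1e-3) * (thickness_um * 1e-6)
    return COPPER_RESISTIVITY_OHM_M * (length_mm * 1e-3) / area_m2


def trace_loss_mw(current_ma, length_mm, width_mm, thickness_um=35.0):
    """Power dissipated in the trace (I^2 * R), in milliwatts."""
    current_a = current_ma * 1e-3
    return current_a ** 2 * trace_resistance_ohm(length_mm, width_mm, thickness_um) * 1e3


# Hypothetical comparison at 100 mA: a long, narrow trace against a short, wide one.
print(trace_loss_mw(100.0, length_mm=50.0, width_mm=0.2))
print(trace_loss_mw(100.0, length_mm=10.0, width_mm=1.0))
```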

Firmware- and software-level power optimisation

The hardware choices described above do no more than provide the developer with the capability to optimise the power design of IoT devices: the potential to save power is only realised if the system’s firmware and software exploit these capabilities.

Developers writing an IoT device’s firmware can take advantage of the capabilities in various ways:

- Implement sleep modes and duty cycling

- Disable unused peripherals such as ADCs and communications interfaces before going into sleep mode

- Perform memory management, for instance by keeping volatile RAM powered only when necessary

- Use edge-triggered interrupts rather than level-sensitive where possible

- Group energy-intensive tasks to minimise the periods in which the system needs to be active, and process collected data in batches rather than individually

Developers should also take care when setting the triggers for interrupt-driven events, and extend the polling intervals for sensor monitoring as much as possible.
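The effect of batching can be estimated by separating the fixed cost of each wake-up from the cost of handling each reading. The model below is a simplified sketch: the function name is hypothetical, as are the charge figures, which would need to be measured on real hardware.

```python
import math


def daily_charge_mc(readings_per_day, batch_size, wake_overhead_uc, per_reading_uc):
    """Charge drawn per day, in millicoulombs, when readings are handled in batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    wakeups = math.ceil(readings_per_day / batch_size)
    total_uc = wakeups * wake_overhead_uc + readings_per_day * per_reading_uc
    return total_uc / 1000.0


# Hypothetical sensor: one reading per minute, 150 uC fixed cost per wake-up,
# 10 uC to process and send each reading.
print(daily_charge_mc(1440, batch_size=1, wake_overhead_uc=150.0, per_reading_uc=10.0))
print(daily_charge_mc(1440, batch_size=60, wake_overhead_uc=150.0, per_reading_uc=10.0))
```

The per-reading cost is unchanged by batching; the saving comes from paying the fixed wake-up cost 24 times a day rather than 1,440 times. The price is added latency, since a reading may wait up to an hour before it is processed.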

Real-time operating systems (RTOSes) such as FreeRTOS and Zephyr, if used, also offer features for power reduction. For instance, the Zephyr RTOS offers device runtime power management and system-managed device power management, which includes methods for configuring device drivers, file systems and other features to support sleep and shutdown capabilities.

Finally, software developers can fine-tune system power consumption during the testing and debugging of prototype designs.

When testing and profiling, the developer can:

- Measure actual power consumption in different states

- Profile wake-up and sleep transition times

- Identify and eliminate unintended wake-up events
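A captured current trace can be scanned for wake-up events by looking for runs of samples above a threshold. The following is a minimal sketch that assumes the trace has already been exported from a power analyser as a list of samples at a fixed interval; the function name, threshold and sample values are hypothetical.

```python
def find_wake_events(samples_ua, sample_period_ms, threshold_ua):
    """Return (start_ms, duration_ms) for each run of samples above the threshold."""
    events = []
    start = None
    for i, value in enumerate(samples_ua):
        if value > threshold_ua and start is None:
            start = i                      # rising edge: an event begins
        elif value <= threshold_ua and start is not None:
            events.append((start * sample_period_ms, (i - start) * sample_period_ms))
            start = None                   # falling edge: the event ends
    if start is not None:                  # trace ended while still awake
        events.append((start * sample_period_ms, (len(samples_ua) - start) * sample_period_ms))
    return events


# Hypothetical trace sampled every 10 ms, in microamps.
trace = [2, 2, 900, 950, 2, 2, 2, 400, 2, 2]
print(find_wake_events(trace, sample_period_ms=10, threshold_ua=100))
```

Comparing the list of detected events with the wake-ups that the firmware is expected to perform is one way to spot unintended activity.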

And when debugging:

- Implement lightweight logging during development

- Consider the debug output’s power impact

- Use non-intrusive monitoring techniques

Optimising communication protocols and strategies for power reduction

A battery-powered IoT device’s link to the world can have a large effect on system power consumption. Developers who want to extend the battery life of IoT devices need to pay careful attention to the effect of their choice of radio protocol on power consumption.

Techniques discussed above, such as duty cycling, and extending sensor polling intervals, might already have reduced the amount of time during which a power-hungry radio is active. But active mode power consumption can also be controlled by making the right choice of protocol.

A Wi-Fi® network provides universal compatibility with internet access devices such as gateways, routers and smartphones. High data rates are another advantage of the Wi-Fi protocol, alongside relatively long range between nodes of up to 50m indoors. But a Wi-Fi transceiver is a heavy consumer of energy – it is intended for use by mains-powered devices and by portable devices such as smartphones that have a relatively large battery.

Wi-Fi networks support the universal Matter protocol for consumer and home automation devices.

For short-range connections, a Bluetooth® Low Energy link suits many IoT devices better than a Wi-Fi network because its power consumption is much lower. Range is shorter than that of a Wi-Fi network, typically 10m indoors, but as the name suggests, the radio is designed for low energy usage while, like a Wi-Fi radio, supporting universal connectivity with smartphones and other types of consumer and home automation device.

An alternative to Bluetooth Low Energy networking is the Zigbee protocol, which is suitable for home automation and sensor monitoring applications. Power consumption of Zigbee equipment is low, and the protocol is widely supported by hardware providers. But Zigbee does not provide the universal compatibility with smartphones and other consumer devices offered by Wi-Fi and Bluetooth networks.

Thread is another low-power networking protocol for connecting smart home and IoT devices. It implements network operations according to the IEEE 802.15.4 standard, and is based on IPv6. It provides a robust and reliable mesh network for devices to communicate with each other and with the internet. Thread networking is designed for use by low-power devices. Like Wi-Fi networks, Thread supports the Matter interoperability protocol.

For long-range connections, a LoRaWAN® low-power wide-area network is ideal for fleets of IoT devices. Range between nodes is measured in kilometres, and radio transmissions consume little power. A LoRaWAN network can be set up and operated by the user, giving it control of network coverage and performance, and freeing it from the obligation to pay network access fees.

An alternative for long-range connections is NB-IoT, which runs on mobile phone network equipment. NB-IoT is a low-power, low data-rate version of 4G (LTE) networking. Fleet operators have to pay a network access fee to a network service provider, and equip devices with a SIM card for registering on the network.

The choice of network protocol strongly affects the ability of developers to reduce power consumption in IoT devices. Having chosen the appropriate radio technology, developers can implement various techniques to reduce its power usage, including:

- Transmission scheduling – a radio SoC uses much more energy when actively transmitting and receiving than when in a quiescent state. The configuration of the radio’s operation should be optimised for the application’s requirement for data throughput and energy use, so that the radio is active only for as long as necessary. This requires careful consideration of the optimal intervals between transmissions, and the application’s real requirement for data communication.

- Adaptive power control – a wireless SoC should provide a means to adjust the radio’s transmit power depending on the required signal strength. When transmitting to a nearby receiver, the transmit power can be lower than when transmitting to a distant receiver. Likewise, attenuation caused by masonry or metal surfaces can require the system to raise transmit power. Implementing adaptive power control allows transmit power to be reduced as much as possible without compromising signal integrity. At lower transmit power, the radio consumes less energy.

- Firmware configuration – efficient packet handling enables the system to minimise the number of radio transmissions, and avoid unnecessary radio wake-up events.
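Adaptive power control can be sketched as a link budget calculation that picks the lowest transmit power setting able to reach the receiver with some margin to spare. This example is an assumption-laden illustration rather than any vendor's algorithm: antenna gains are ignored, and the function name, the power levels, the receiver sensitivity and the path loss figures are all hypothetical.

```python
def select_tx_power_dbm(path_loss_db, rx_sensitivity_dbm, margin_db, available_dbm):
    """Lowest available transmit power that still meets the link budget, or None."""
    required_dbm = rx_sensitivity_dbm + margin_db + path_loss_db
    for level in sorted(available_dbm):
        if level >= required_dbm:
            return level
    return None   # the link cannot be closed even at maximum power


# Hypothetical radio with seven power settings and a -95 dBm receiver sensitivity.
levels = [-20, -12, -8, -4, 0, 4, 8]
print(select_tx_power_dbm(70, -95, 10, levels))   # nearby receiver
print(select_tx_power_dbm(90, -95, 10, levels))   # distant or obstructed receiver
```

In practice the path loss would be estimated from feedback such as the received signal strength reported by the other end of the link, and re-evaluated as conditions change.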

Advanced power management techniques

The techniques, strategies and component choices described above provide a guide to reducing power consumption in IoT systems.

There are, though, additional ways to extend battery life in IoT devices.

The conventional model for power optimisation of IoT devices is based on the assumption that the system has a fixed store of energy provided by a battery, and that the job of the designer is to eke out this energy store for as long as possible by drawing energy out of the battery as slowly as possible, using techniques such as power gating, duty cycling, and dynamic voltage and frequency scaling.

But what if the energy store could be continually replenished? This is the promise of energy harvesting, the process of drawing energy from freely available energy sources. The most commonly used energy sources are:

- Light, captured by photovoltaic (PV) cells. Ambient light can be sunlight or artificial light from indoor luminaires.

- Heat, captured by a thermoelectric generator (TEG).

- Motion – bursts of kinetic energy can be captured, for instance when a mechanical switch or button is pressed.

The architecture of a typical energy harvesting system consists of an energy source, an energy store (such as a supercapacitor or small battery, to keep the energy for when it is needed), the load or power consumer, and a power management IC which regulates the operation of the harvesting energy system.
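Whether such a system can sustain itself depends on the balance between harvested energy and the load over a full day. The sketch below steps through a day hour by hour using a deliberately simple model: a fixed conversion efficiency for the power management IC, a constant average load, and no leakage in the energy store. The function name and every figure are hypothetical.

```python
def storage_after_day_j(stored_j, capacity_j, harvest_uw_by_hour, load_uw, efficiency=0.8):
    """Simulate the energy store hour by hour.

    Returns the stored energy in joules at the end of the profile,
    or None if the store runs empty along the way.
    """
    for harvest_uw in harvest_uw_by_hour:
        net_uw = harvest_uw * efficiency - load_uw
        stored_j += net_uw * 1e-6 * 3600.0      # uW over one hour, in joules
        if stored_j <= 0.0:
            return None
        stored_j = min(stored_j, capacity_j)    # the store cannot overfill
    return stored_j


# Hypothetical indoor PV cell: 100 uW for 10 lit hours, nothing in the dark.
profile = [0.0] * 7 + [100.0] * 10 + [0.0] * 7
print(storage_after_day_j(1.0, 5.0, profile, load_uw=20.0))
```

If the store ends the day with at least as much energy as it started with, the design is energy-neutral for that profile. A result of `None` indicates that the store, the harvester or the load budget needs to change.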

Energy harvesting components such as PV cells and TEGs are today readily available in various sizes and power output ratings. Specialist PMICs for energy harvesting include the NEH2000BY, NEH7100BU and NEH7110BU from Nexperia.

There are other ways for IoT device designers to extend battery life without reducing power consumption. Every battery has a nominal specified capacity, normally expressed as charge in Ah or mAh. In practice, however, the amount of energy that the battery can actually supply before becoming functionally discharged might be considerably less than the nominal value, for instance because the battery does not reach its nominal capacity when charged.

A battery management system is a circuit which maximises the amount of energy actually available to the application. Battery management can be implemented with dedicated battery management ICs supplied by manufacturers such as Texas Instruments or Renesas. Battery management will provide functions such as cell balancing, to ensure that a lithium battery equalises the voltage across all cells when charging, to maximise its fully charged capacity.

A battery management system can also boost the output voltage of the battery towards the end of the discharge cycle, to maintain the voltage above the minimum threshold that the load circuit can handle, and so avoid the battery reaching the end of its discharge cycle prematurely.

Developers can also implement dynamic power management to match the battery’s power output to the real needs of the application. Just like the power mode settings provided to users of a PC, an IoT device can adopt dynamic power mode settings which enable the system to switch between a performance-optimised and power-optimised orientation, depending on the current requirements of the application.

Conclusion

The guidance provided in this article shows that efforts to reduce power consumption in IoT devices must be part of a holistic strategy which combines design approaches to hardware, firmware, wireless communication, and battery management.

In recent years, and in response to market demand as well as government regulation, component manufacturers have made great strides in reducing the active and quiescent mode power consumption of the main energy-using devices such as MCUs, MPUs, SoCs, GPUs and FPGAs.

This trend can be expected to continue as semiconductor process technology advances, creating structures and materials which operate more efficiently and which enable electronic functions to be performed faster, so that the system can spend longer in quiescent mode. This promises a future in which IoT devices can operate for longer between battery replacements, or can use smaller batteries, helping to reduce system cost and size, and shrinking the environmental footprint of tomorrow’s IoT.

Electronics design engineers can always find news of the latest low-power components here, and explore their potential with new and exciting development boards.

And subscribe to www.youtube.com/@ipXchange to make sure you are notified of new videos on the platform.
