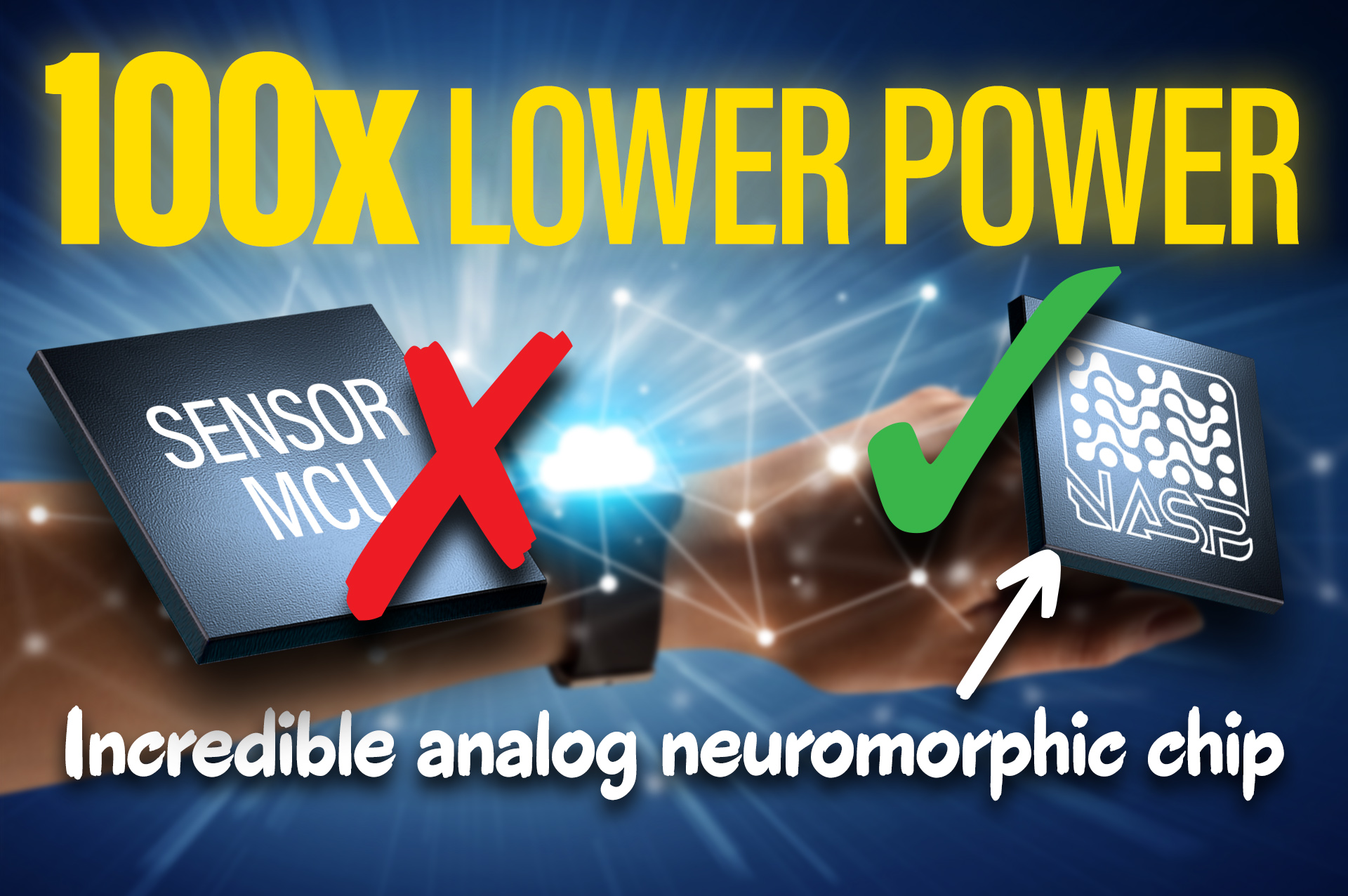

POLYN is flipping edge AI design on its head. Instead of running ML models on a traditional processor or microcontroller, the POLYN analog neural chip directly implements the network in silicon. No CPU. No memory fetches. No clock. Just microwatts and microseconds from input to inference.

This chip is built using POLYN’s NASP (Neuromorphic Analog Signal Processing) technology, which takes a trained neural network and physically maps it to a circuit of op-amps and resistors. POLYN’s proprietary toolchain compiles the model into silicon, and the result is a static, asynchronous neural network that delivers results from sensor to output within microseconds.
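To get a feel for how a weighted sum can live in op-amps and resistors, here is a minimal sketch based on the textbook ideal inverting summing amplifier, where each weight is set by a resistor ratio (w_i = R_f / R_i). This is an illustration only, not POLYN's actual circuit or toolchain output; the function names, the 100 kΩ feedback resistor, and the example weights are all assumptions made for the demo.

```python
# Illustrative only: an ideal inverting summing amplifier as one "neuron".
# Not POLYN's circuit; names and component values are hypothetical.

R_FEEDBACK_OHMS = 100_000.0  # assumed feedback resistor R_f


def resistors_for_weights(weights, r_feedback=R_FEEDBACK_OHMS):
    """Pick an input resistor per weight using w_i = R_f / R_i."""
    if any(w <= 0 for w in weights):
        # Negative weights need extra circuitry (e.g. an inverting stage),
        # which this sketch deliberately leaves out.
        raise ValueError("this sketch handles positive weights only")
    return [r_feedback / w for w in weights]


def summing_amp_output(inputs, resistors, r_feedback=R_FEEDBACK_OHMS):
    """Ideal inverting summing amplifier: V_out = -R_f * sum(V_i / R_i)."""
    return -r_feedback * sum(v / r for v, r in zip(inputs, resistors))


weights = [0.5, 2.0, 1.0]   # trained weights (made-up values)
inputs = [0.2, 0.1, 0.3]    # input voltages in volts (made-up values)

resistors = resistors_for_weights(weights)
v_out = summing_amp_output(inputs, resistors)
weighted_sum = sum(w * v for w, v in zip(weights, inputs))

print("input resistors (ohms):", resistors)
print("amplifier output (V):", v_out)
print("digital weighted sum:", weighted_sum)
```

The amplifier output equals the negated weighted sum, so the "computation" is just currents adding at a node. Nothing is fetched or clocked, which is the intuition behind the static, asynchronous network described above.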

Ultra-low power, ultra-fast inference

The first commercial POLYN analog neural chip is a voice activity detection (VAD) solution that consumes just 34 µW and completes inference in under 30 µs. It’s already being evaluated in battery-powered IoT applications, wearables, and medical monitoring systems where energy and latency are critical design constraints.
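Those two numbers can be turned into a rough energy budget. The sketch below multiplies the quoted 34 µW by the quoted 30 µs to get an upper bound on energy per inference, then estimates how long the chip alone could run from a small coin cell. The cell capacity (225 mAh at 3.0 V) is an assumption chosen for illustration, and the estimate ignores self-discharge, regulator losses, and every other component in a real product.

```python
# Back-of-the-envelope energy budget from the figures quoted in the article.
POWER_W = 34e-6      # 34 µW, from the article
LATENCY_S = 30e-6    # "under 30 µs", so treat this as an upper bound

# Upper bound on energy spent during one inference window.
energy_per_inference_j = POWER_W * LATENCY_S

# Assumption (not from the article): a coin cell of 225 mAh at 3.0 V.
CELL_CAPACITY_MAH = 225.0
CELL_VOLTAGE_V = 3.0
cell_energy_j = CELL_CAPACITY_MAH / 1000.0 * 3600.0 * CELL_VOLTAGE_V

# Always-on runtime for the chip alone, ignoring all other loads and losses.
runtime_days = cell_energy_j / POWER_W / 86_400.0

print(f"energy per inference: {energy_per_inference_j * 1e9:.2f} nJ")
print(f"cell energy: {cell_energy_j:.0f} J")
print(f"always-on runtime (chip only): {runtime_days:.0f} days")
```

Under these assumptions the result is about 1 nJ per inference and a chip-only runtime of more than two years. In practice the sensor, radio, and power conversion will dominate the budget, so treat this as a ceiling rather than a prediction.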

POLYN provides a full evaluation workflow for engineers, including digital twins, hardware samples, and an SDK. You can simulate your AI model on POLYN’s platform before committing to tapeout, streamlining the development process for sensor-focused edge AI systems.

A new hardware model for embedded AI

Unlike general-purpose AI/ML accelerators, POLYN’s chips are application-specific. You bring your trained model, and POLYN works with you to compile it into a custom analog layout. Because the network is the circuit, there is no instruction fetch, memory access, or clocking overhead, making the approach well suited to ultra-compact, real-time edge systems.

Engineers working in industrial sensing, gesture recognition, and medical wearables now have a way to run always-on AI without draining the battery. This is also a key enabler for low-power or disposable devices, where extending battery life by weeks or months can define the entire product category.
