Solutions

Published

17 August 2025

Written by Tim Weekes Senior Consultant

Tim trained as a journalist and wrote for professional B2B publications before joining TKO in 1998. In his time at TKO, Tim has worked in various client service roles, helping electronics companies to achieve success in PR, advertising, lead generation and digital marketing campaigns. He now supports clients with strategic messaging, the writing of technical and marketing promotional materials, and the creation of videos and podcasts. Tim has a BA (Hons) degree and a Diploma in Direct Marketing.

Why real-time decision-making matters in embedded AI

Real-time operation, deterministic operation, low-latency decision-making: these have been the stock-in-trade of embedded systems developers for decades.

Engineers need a way for complex software-driven systems to guarantee that decisions can be taken and operations performed within time windows measured in milliseconds or microseconds: hardware such as microcontrollers (MCUs) and FPGAs, real-time operating system (RTOS) software, and techniques such as priority-based pre-emptive scheduling have provided the answers to the problem in traditional logic-based systems. They allow for deterministic operation in every type of embedded system, from autonomous navigation in missiles and avionics in jet fighters to safety-critical process control in industrial environments to electronic control units for functions such as braking or engine control in vehicles.

The logical nature of control software is conducive to real-time decision-making: a known set of if/then and other logical operations can readily be bounded within a specified time window. This allows the developer to set guaranteed real-time response times in traditional embedded control applications.

The adoption of AI technology poses a significant challenge to developers of real-time embedded systems, because of the probabilistic nature of AI reasoning. In logical reasoning, answers to questions are binary; in AI, an inference is expressed to the user in binary terms, but the reasoning is actually based on a calculation of probability. The probability is calculated by reference to inputs, weights and parameters: the number of operations or calculations required to generate an inference can vary from task to task. In the simple example of an autonomous driving (AD) car recognizing that an image captured by its vision camera is a pedestrian, the number of operations required to generate an inference will vary depending on how well defined the image of the pedestrian is, how much visual noise obscures the image, and how closely the image matches the algorithm’s model of what a pedestrian looks like.

This means that inferencing times can vary from scenario to scenario. This is in marked contrast to traditional control logic, in which, given a similar set of operating conditions, the time to take a decision will always be the same.

Yet in edge AI systems as much as in traditional control systems, real-time decision-making is required for operational effectiveness and to maintain safety. The problem of real-time edge AI inference is equally acute in military and civilian drones, in factory automation, and in AD vehicles and driver assistance systems.

In these types of systems, it is often not enough for AI inference to be accurate: it must also be fast.

This article explores the challenge of real-time AI decision-making, and explains the main hardware and software factors which contribute to a successful embedded real-time AI system design.

Understanding real-time constraints in embedded systems

The requirements of real-time AI decision-making differ from product to product and application to application. In fact, there are different categories of real-time operation.

Hard real-time systems have the strictest constraints: a missed deadline is considered a catastrophic failure. For example, an aircraft’s flight control system must always respond on time to maintain safe operation.

In a firm real-time system, a missed deadline is undesirable but does not cause total system failure. The result of a delayed task is simply discarded, as is the case with a packet transmission in a network switch.

Soft real-time systems are the most forgiving. A missed deadline is acceptable: the effect is only to degrade performance or quality, observed for instance in a video stream briefly lagging.

Deterministic behavior and guaranteed latency

An application’s requirement for hard, firm or soft real-time operation affects whether the system will be designed to produce deterministic behavior.

In an embedded system, deterministic behavior means that its response to an event is always predictable and repeatable, given the same initial conditions. What can be predicted is not only the correct output, but also the timing of that output.

Timing guarantees are the core of deterministic behavior. They ensure that a task will be completed within a specified, maximum time frame – in other words, with guaranteed latency. The predictability of the timing is what distinguishes a deterministic system from a non-deterministic one, where task completion times can vary unpredictably.

Task scheduling for deterministic behavior

An RTOS is able to arrange the performance of operations within a specified time frame by applying a scheduling technique. The three most commonly deployed are:

- Pre-emptive scheduling – ensures determinism by strictly prioritizing tasks: a higher-priority task will always interrupt a lower-priority one.

- Co-operative scheduling – achieves determinism by having tasks voluntarily yield the CPU at predictable points in time. This method avoids the unpredictability of pre-emption, but requires careful design to prevent a long-running task from blocking others.

- Rate-monotonic scheduling – a static-priority pre-emptive algorithm. It assigns higher priorities to tasks with shorter periods. This deterministic method ensures that periodic tasks with the most frequent deadlines are scheduled first.

Hardware considerations for edge AI real-time inference

The faster the hardware platform on which an edge algorithm is to run, the easier it will be to operate within real-time constraints.

The raw throughput of an edge AI system is normally measured in GOPS or TOPS: giga-operations per second, or tera-operations per second. This performance specification on its own provides no guarantee about the time taken to perform any given AI inference, but in general, the higher the GOPS or TOPS rating, the faster the device will perform any given inference task.

Engineers will find dramatic differences in the AI throughput rating of different types of processor. AI inference is an inherently parallel process. The CPU in a microcontroller or microprocessor is optimized for serial operations, whereas a neural processing unit (NPU), graphics processing unit (GPU) or FPGA is architected for parallel processing. This means that NPUs, GPUs and FPGAs tend to offer much higher AI inference performance than MCUs or application processors do.

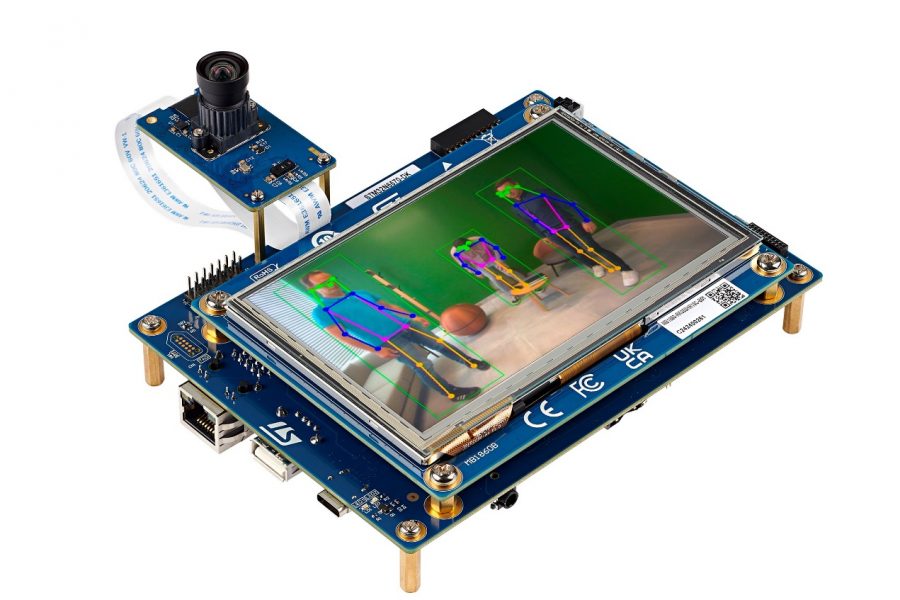

While NPUs are available as discrete AI hardware accelerators, a common trend is for MCU and application processor manufacturers to integrate NPU IP, such as the Arm® Ethos™ family, into their devices. This enables embedded system developers to benefit from the control logic, system management and analog interface capabilities of an MCU or application processor alongside high-performance AI inferencing in a single compact chip.

Manufacturers such as STMicroelectronics with its STM32 line of MCUs, NXP Semiconductors with its i.MX family of application processors, and Alif Semiconductor with its Ensemble family of MCUs and fusion processors have all adopted this strategy. These products offer the promise of real-time AI inferencing enabled by the on-chip NPU with real-time control functionality.

Taking account of memory and power

Low-latency embedded AI is not just a matter of AI processor speed. To make use of the raw speed of a hardware AI accelerator, the inference system needs to be structured to serve up input data without interruption: this requires sufficient fast internal memory, tightly coupled with the processing system via a high-speed interface, potentially supported by external memory also connected via a fast interface.

Since many edge AI applications perform inferencing on video, audio or motion data, the MCU, application processor, FPGA or NPU also requires support from dedicated hardware (such as an image signal processor or a digital signal processor, or DSP) to pre-process input data at a fast rate from image sensors, microphones, inertial measurement units (IMUs) and other sensors, to make it suitable for handling by the AI accelerator.

Hardware which is capable of fast edge AI inference not only helps developers achieve their goals for real-time operation, it also helps to save power by completing inference tasks faster, enabling the host system to spend longer periods in energy-saving low-power modes.

Designing AI models for low-latency inference

After hardware selection, the developer’s next task is to optimize the edge AI model itself for low latency and low power.

In general, the latency of a neural network inference is largely determined by the number of sequential operations – such as multiplications and additions – that a processor must complete. Each layer in a network requires a series of these computations. A shallow neural network has fewer layers, meaning there are far fewer operations to perform. This directly reduces the time it takes for the model to process an input and produce an output. In contrast, a deep neural network can have dozens or even hundreds of layers: this requires substantially more computation and memory, leading to higher latency.

A key technique to consider when optimizing an edge AI model is quantization, which reduces the model’s precision (typically from 32-bit floating-point to 8-bit integer): this shrinks its size – the memory footprint occupied by the code – and reduces the amount of compute power required to perform inference, without a substantial loss of accuracy. This enables faster execution.

Additionally, model pruning can be used to remove unnecessary weights and connections (neurons) in the network, reducing the overall number of calculations required for a single inference.

Activation functions, especially complex ones such as sigmoid or tanh, introduce non-linear calculations which are computationally intensive for an edge device’s processor. These operations require more clock cycles than simple additions or multiplications. To speed up inference, developers often replace activation functions with simpler, faster alternatives such as ReLU (rectified linear unit).

Building deterministic time frames into AI algorithm design

Finally, developers can deploy methods to build compliance with deterministic timings into the model.

Deadline-aware optimization frameworks can employ a number of strategies to achieve this, including:

- Adaptive resource allocation: dynamically allocating computational resources (such as an NPU core or GPU) to a neural network task to ensure it finishes within its deadline. If a task has a tight deadline, it may receive more resources, while a less critical task might be allocated fewer.

- Model-specific adaptation: the optimization framework may explore various model architectures and compression techniques to find the configuration that provides the best accuracy while still meeting the deadline. This often involves a trade-off in which some accuracy might be sacrificed for low latency.

- Application-level feedback: These systems can use a feedback loop from the application to understand the impact of a model’s performance on the overall system’s quality of service. For example, a system might adjust the model’s complexity in real-time to maintain a desired level of accuracy while staying within the timing budget.

Scheduling and prioritization of AI tasks

The implementation of real-time decision-making in complex embedded AI systems depends on the effective categorization of events and processes on a scale from high-priority – for those with very low tolerance for latency – to low-priority, if they can be performed on a best-effort basis.

An RTOS provides an ideal environment for prioritization: one of the core functions of an RTOS is to support multi-tasking, and RTOSs have for decades been used to perform hard real-time scheduling of control logic in highly demanding and mission-critical applications such as avionics, industrial process control and robotics.

When integrating AI inferencing into a real-time schedule, however, prioritization becomes more difficult, because AI inferences may be of variable duration.

Use of a pre-emptive priority-based scheduler allows control tasks to maintain the highest priority, ensuring that hard real-time deadlines for mission-critical operations are never missed. In this type of scheduling scheme, AI inference is a lower-priority task which can be pre-empted when control operations need to be executed.

Partitioning inference workloads into smaller, interruptible chunks reduces the period during which inferencing blocks the operation of other time-critical operations. Alternatively, developers may implement temporal isolation, reserving dedicated time slots for AI processing during periods when the system is idle, or between control cycles. To maintain timing discipline in edge AI inferencing, the application of worst-case execution time (WCET) analysis enables the developer to guarantee AI operation within real-time constraints, while accounting for the characteristic cache effects and memory access patterns in AI computations.

If the system requires concurrent execution of AI and control operations within tight timing constraints, a dual-core architecture enables the allocation of a dedicated core to each domain, eliminating the risk that conflict for hardware resources causes an operation to exceed its time window.

Pipelining and buffering for throughput optimization

The objective of prioritization and scheduling is to make the best use of the available hardware resources, given the competing calls on those resources from different operations, such as control logic and edge AI inference.

Developers can also take steps to reduce the risk of conflict for resources between different categories of tasks by improving the efficiency with which tasks use the available hardware.

A valuable technique for achieving efficiency gains in edge AI inference is data pipelining: this substantially reduces AI inference latency by overlapping computation and memory operations. Instead of processing data sequentially, pipelining breaks inference into parallel stages – data pre-processing, neural network layers, and post-processing – which execute concurrently on different batches of data.

This approach makes the most efficient use of the available hardware resources by keeping processing units continuously active, ensuring that one stage processes current data while another loads the next batch. Bottlenecks in memory bandwidth are minimized through pre-fetching and buffering techniques.

Data pipelining does need to be implemented with caution, however: the deeper the pipeline, the more efficiently data can be processed through the available hardware, but the higher the risk of higher initial latency. The developer can reduce the risk of pipeline stalls through the use of double-buffering: maintaining two data buffers per stage, one actively processing while the other loads new data or outputs results. This ensures continuous data flow without waiting for memory operations.

Ring buffers extend this concept to multi-stage pipelines, creating circular buffer arrays in which producers and consumers of data operate on different buffer segments simultaneously. Multiple pipeline stages can read/write concurrently without blocking, thus maximizing throughput.

Validation and testing of real-time AI systems

In designing a system to support real-time AI decision-making, developers need a way to determine whether the strategies that they have deployed, such as pre-emptive scheduling and data pipelining, result in performance of AI and control operations within real-time constraints.

Multi-layered profiling provides an effective means to analyze end-to-end latency. At the hardware level, performance counters provide precise measurement of CPU cycles, cache misses, and memory access patterns for both AI inference and control operations.

Timestamp-based tracing provides a measure of real-time performance at critical points in the system, such as sensor inputs, pre-processing, inference execution, processing of control algorithms, and actuator outputs. High-resolution timers or dedicated timing hardware can trace system timings with microsecond-level precision without introducing measurement overhead.

In addition, developers can use static timing analysis tools to help determine worst-case execution times for the prediction of deterministic behavior. This can be combined with dynamic profiling under realistic workloads to detect timing variations and to pinpoint bottlenecks.

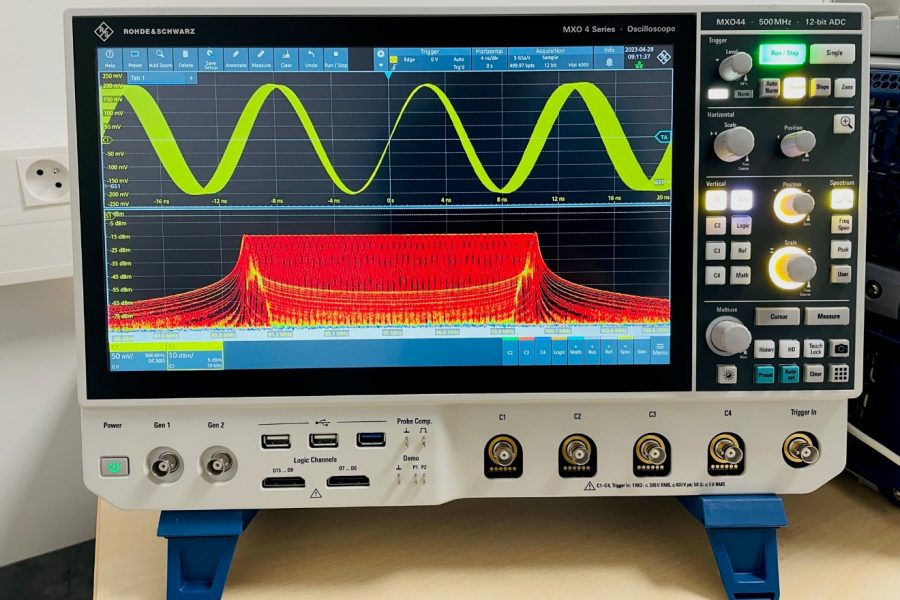

Logic analyzers and oscilloscopes enable hardware-level validation of timing constraints, particularly for interrupt response and I/O operations. Real-time trace buffers capture execution flows without disrupting system timing.

Statistical analysis of timing distributions reveals patterns in the variability of latency. Percentile-based metrics provide a better insight into tail latencies – latency events which occur infrequently – that could violate real-time constraints than averages do.

By correlating performance data with system states, the developer can detect timing dependencies and optimize scheduling decisions.

Developers might also consider hardware-in-the-loop testing, which allows systematic evaluation of AI performance across the full operating envelope: varying environmental conditions, sensor noise levels, and system states that would be difficult to reproduce consistently in field testing. This is particularly valuable for safety-critical applications in which AI inference timing must be validated in the worst case.

The controlled environment of hardware-in-the-loop testing enables precise measurement of end-to-end system response times, from sensor input through AI processing to control output, while maintaining the complexity and timing characteristics of real-world operation.

Future of real-time embedded AI

The technology of AI evolves at an extraordinary pace, and new features and frameworks constantly bring new opportunities for embedded system manufacturers to introduce new AI capabilities into their products.

For low-latency embedded AI systems, improvements in the support for AI in RTOS products provide a particularly fruitful area of study. In modern RTOS platforms, specialized hardware accelerators have become an effective tool for improving performance and energy efficiency – RTOS kernels now provide dedicated APIs and drivers for NPUs and AI accelerators.

New scheduling frameworks incorporate AI-aware task management, allowing RTOSs to handle inference workloads alongside traditional control tasks with predictable timing guarantees.

Emerging features include memory management optimized for AI models, with support for model compression and quantization directly within the kernel. On-the-fly model changes, a feature of an RTOS’s adaptability, introduce a dynamic dimension, enabling systems to update AI models during runtime without compromising real-time constraints.

Advanced RTOS implementations now also offer integrated profiling tools for analysis of AI inference timing and resource allocation mechanisms which balance compute resources between AI processing and critical control functions while maintaining deterministic behavior.

Rise of neuromorphic processors

Innovation is coming to the fore in hardware as much as in software. Edge AI systems to date have been based on familiar hardware architectures – largely Arm Cortex®-M-based MCUs and SoCs, and Arm Cortex-A or x86-based application processors, supplemented by on-chip hardware acceleration for neural networking. Some argue, however, that a form of digital processing implemented through software compiled to an instruction set is an inefficient way of handling AI algorithms.

A new generation of start-up companies such as Blumind and Applied Brain Research is developing neuromorphic processors – hardware devices which, rather than adopting a traditional von Neumann architecture, instead mimic in silicon the structure of the brain’s neurons and synapses. This means that input data can be processed in a natively neural way, rather than converting neural networking inputs to a digital data structure and processed in software. The makers of neuromorphic processors claim that their devices are able to perform certain kinds of edge AI applications tens or hundreds of times faster than traditional von Neumann-based devices, and with power consumption which is orders of magnitude lower.

Industry collaboration promotes edge AI adoption

It is not just individual companies which are working to improve the operation of AI at the edge. Industry is collaborating to create standards which promote interoperability and accelerate system development. For instance, Open Neural Network Exchange (ONNX) is a standard interoperability library: many embedded software distributions support this standard, allowing framework selection without compatibility concerns.

OpenVINO serves as an open-source toolkit for optimizing and deploying deep learning models from the cloud to the edge, accelerating inference across various use cases with models from popular frameworks such as PyTorch, TensorFlow and ONNX.

For evaluating the performance of new embedded AI product designs, the MLPerf benchmarks, developed by MLCommons, provide unbiased evaluations of training and inference performance for hardware, software and services. MLPerf enables manufacturers to compare performance objectively.

Conclusion: making real-time AI a practicable option for all

The understanding and practice in the field of real-time AI decision-making are advancing rapidly and in many directions. The key strategies for developing a low-latency embedded AI system, however, will remain constant: careful selection of the hardware device on which AI algorithms are to run, priority-aware scheduling of real-time AI and control tasks, and end-to-end measurement of AI performance to evaluate whether it fits within real-time constraints.

In an emerging and fast-changing arena such as AI, it can be helpful for embedded system developers to call on specialist knowledge, and AI experts can contribute insights into the challenges that are new to engineers who have grown up in the world of control logic. These include the methods for creating training datasets, the development of AI models, and the optimization of models for constrained hardware environments.

And one other source of help is of great value: the ipXchange library of news articles, webinars and videos on the latest components for edge AI such as the DeepX hardware AI accelerator or the Blumind neuromorphic processor.

You must be signed in to post a comment.

Comments

No comments yet