
Published

9 June 2025

Written by Tim Weekes, Senior Consultant

Tim trained as a journalist and wrote for professional B2B publications before joining TKO in 1998. In his time at TKO, Tim has worked in various client service roles, helping electronics companies to achieve success in PR, advertising, lead generation and digital marketing campaigns. He now supports clients with strategic messaging, the writing of technical and marketing promotional materials, and the creation of videos and podcasts. Tim has a BA (Hons) degree and a Diploma in Direct Marketing.

Why sensor fusion implementations need to be optimised

Like people, electronic devices depend on their senses to understand and interact with the world around them. This applies as much to traditional electronic systems, such as marine navigation systems, industrial controls and home appliances, as it does to the latest autonomous systems, such as robots, drones and autonomous vehicles. And just as humans use eyes, ears, nose and skin in combination to sense the world, electronic devices form the most accurate and complete picture of their environment when they combine multiple sensor inputs.

In the past, embedded sensor fusion had limited application. Strangely, this was not because of a lack of sensor functionality: it was because the processing capability to run complex sensor fusion algorithms was not yet cheap and readily available in the microcontrollers and other processor-based devices on which most embedded devices are based.

By the 2020s, this limitation had been eliminated: today, low-cost 32-bit MCUs, many based on the Arm® Cortex®-M architecture, have ample processing power to run sensor fusion algorithms.

So what is embedded sensor fusion, and why is it important to optimise the operation of these algorithms?

Sensor fusion is the process of combining the outputs from multiple sensors to provide a better reading of the environment than any one sensor could achieve on its own.

There are three ways in which sensor fusion achieves this effect.

Optimising sensor performance: in some sensor fusion implementations, the output of one sensor can be used to calibrate or correct the output of another. For instance, in a multiple degrees-of-freedom motion sensing system built with MEMS inertial measurement units (IMUs), the orientation calculated by integrating the gyroscope’s output drifts over time as small bias and noise errors accumulate. Outputs from the system’s accelerometers, which sense the direction of gravity, provide an absolute reference for pitch and roll that can be used to correct this drift, dramatically reducing the error in the orientation estimate.

Here, then, a sensor fusion algorithm has the effect of producing more accurate measurements at the system level.

Generating a more useful picture: a multi-sensor set-up containing sensors with different and complementary strengths and weaknesses can provide a richer and more nuanced output. For instance, in an Advanced Driver Assistance System (ADAS) or autonomous vehicle, a combination of radar, LiDAR and stereo vision cameras may be used. LiDAR provides a detailed depth map. Radar provides lower-resolution 3D information, but good measurement of the velocity and direction of movement of objects. Vision cameras enable precise identification of objects such as pedestrians and bicycles.

Likewise, in a wearable device such as a smart watch or wristband, the combination of accelerometers, gyroscopes, heart rate/blood pressure monitor and temperature sensor enables the device to give more context-aware feedback to the wearer than any one sensor on its own can provide. For instance, an unusually elevated heart rate and blood pressure detected alongside sustained movement detected by the motion sensors is consistent with the normal effect of exercise.

The same heart rate and blood pressure readings detected when the wearer is still could be an indication of heart disease.

Maintaining sensor system reliability: in the vehicle system above, sensor fusion algorithms help the system to maintain safe operation across a wide range of conditions. For instance, rain, snow or fog can impair or halt the operation of LiDAR and vision cameras, but have much less effect on radar, enabling the navigation system to continue to perform object avoidance, even with a reduced picture of the environment around the vehicle.

In all these cases, the algorithm which fuses the inputs from multiple sensors needs to be optimised to maintain reliability and ensure the user has full confidence in the system.

Key challenges in multi-sensor fusion

The extent of the optimisation of a sensor fusion algorithm is always dependent on the hardware platform on which it runs and the requirements of the application in which the sensors operate.

Respecting compute system limitations

For example, a drone using multiple IMUs and a global navigation satellite system (GNSS) receiver requires accurate measurements of position, heading and attitude at all times, in order to maintain thrust and to navigate correctly. But the system is constrained in its processor throughput: it supports other workloads in addition to the navigation and stabilisation functions.

The higher the output data rate of the sensor fusion algorithm, the more precisely the drone’s motion can be controlled, but the higher the burden on the processor and its associated RAM, requiring more energy, and potentially crowding out other processing functions.

So an optimised sensor fusion algorithm limits the output data rate to the minimum needed to maintain safe motion and accurate navigation, while leaving as much processing bandwidth available for other functions as possible.
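As a rough illustration of this trade-off, the sketch below estimates the processor load of a fusion algorithm at several candidate output data rates, and picks the lowest rate that still meets the control requirement. The function names, cycle count, clock frequency, candidate rates and load budget are all assumed figures chosen for illustration; they are not measurements from a real drone.

```python
def cpu_load(update_rate_hz, cycles_per_update, cpu_hz):
    """Fraction of the processor consumed by the fusion algorithm."""
    return update_rate_hz * cycles_per_update / cpu_hz


def pick_output_rate(candidate_rates_hz, min_rate_hz, cycles_per_update,
                     cpu_hz, budget):
    """Return the lowest candidate rate that satisfies the control loop.

    Returns None if even that rate would exceed the share of the processor
    (budget, 0..1) reserved for sensor fusion.
    """
    for rate in sorted(candidate_rates_hz):
        if rate >= min_rate_hz:
            load = cpu_load(rate, cycles_per_update, cpu_hz)
            return rate if load <= budget else None
    return None


if __name__ == "__main__":
    # Assumed figures: 80 MHz MCU, 40,000 cycles per fusion update,
    # control loop needs at least 180 Hz, fusion may use 25% of the CPU.
    rate = pick_output_rate([100, 200, 400, 800], 180, 40_000, 80e6, 0.25)
    print("chosen rate (Hz):", rate)
    print("load at 800 Hz:", cpu_load(800, 40_000, 80e6))
```

With these assumed numbers the 200 Hz rate uses about 10% of the processor, whereas running at 800 Hz would use about 40%, leaving far less headroom for the drone's other workloads.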

Managing processor system constraints

In general, tuning a multi-sensor algorithm is an iterative process of optimisation and parameter adjustment. System architects must balance the desire to extend the performance of the sensor fusion algorithm with the constraints of an embedded device’s compute resource: this often necessitates simplification of the algorithm, or adding hardware acceleration in the form of a dedicated digital signal processor (DSP) or FPGA co-processor.

The impact of hardware constraints is particularly acute in systems that require real-time responses. Multi-sensor algorithm tuning requires careful consideration of interrupt handling, task scheduling, and data buffering strategies. Engineers must account for sensor sampling rates, processing latencies, and communication overhead when designing systems which can reliably fuse multiple data streams without dropping samples or introducing unacceptable delays.

Maintaining low power consumption in battery-powered systems

Sensor fusion algorithms that continuously process multiple high-speed data streams can quickly drain a battery power supply if not properly optimised, especially in products such as wearable devices that contain only a small battery.

This means that embedded sensor fusion implementations must often use techniques such as:

- Adaptive sampling

- Sensor duty cycling and intelligent wake-up mechanisms

This requires close collaboration between hardware and software teams to implement power-aware sensor fusion algorithms which can dynamically adjust their computational intensity based on the available power and the requirements of the application.
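One simple form of adaptive sampling is to sample quickly only while the signal is changing. The sketch below is a minimal example under assumed values: the function name, the two sampling rates and the variance threshold are hypothetical, and a real design would derive them from the sensor's noise floor and the application's response-time requirement.

```python
from statistics import pvariance


def next_sample_rate(recent_samples, low_hz=10, high_hz=200, threshold=0.05):
    """Choose the next sampling rate from the variance of recent samples.

    A quiet signal (low variance) is sampled slowly to save power; an
    active signal is sampled at the full rate. With too little history,
    the function falls back to the high rate so that no activity is missed.
    """
    if len(recent_samples) < 2:
        return high_hz
    return high_hz if pvariance(recent_samples) > threshold else low_hz


if __name__ == "__main__":
    resting = [1.00, 1.01, 0.99, 1.00]   # e.g. accelerometer magnitude in g
    moving = [0.0, 1.0, -1.0, 2.0]
    print(next_sample_rate(resting), next_sample_rate(moving))
```

The same decision could also drive sensor duty cycling, for example by keeping a power-hungry sensor asleep until the low-rate sensor reports activity.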

Synchronising sensor outputs across different interfaces and sampling rates

The different protocols and physical interfaces used to route sensor outputs to the processor on which a sensor fusion algorithm runs affect the way that the system can be optimised.

For instance, one sensor in a multi-sensor set-up might use an I2C interface, while another might use SPI, and a third, UART – with each interface supporting a different output data rate. Different sensors might also operate at varying sampling rates, and suffer from clock drift.

This can make synchronisation of sensor data difficult. Successful approaches to synchronisation can include:

- Hardware-based timestamping

- Dedicated sensor interface controllers

- Sensor-specific interrupt structures

- Dedicated timing controllers or GPS-disciplined oscillators
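Once every sample carries a timestamp from a common clock, a slower sensor stream can be resampled onto the time base of a faster one. The sketch below shows one way to do this with linear interpolation. It assumes integer millisecond timestamps in strictly increasing order; the function names and sample values are illustrative only.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a time-stamped series at time t.

    Returns None when t lies outside the recorded interval, so the caller
    can decide how to handle data that would need extrapolation.
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def align(fast_timestamps, slow_timestamps, slow_values):
    """Resample the slow stream at each timestamp of the fast stream."""
    return [interpolate_at(slow_timestamps, slow_values, t)
            for t in fast_timestamps]


if __name__ == "__main__":
    # Slow sensor at 10 Hz, fast sensor at 20 Hz (timestamps in ms).
    print(align([0, 50, 100, 150, 250], [0, 100, 200], [0.0, 1.0, 3.0]))
```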

Compensating for sensor data errors

Embedded sensor fusion systems must robustly handle missing data, a phenomenon normally attributable to communication time-outs, sensor malfunctions, or interference.

The challenge for system architects lies in implementing data interpolation and extrapolation strategies which maintain fusion accuracy while operating within embedded resource constraints. Simple linear interpolation may be sufficient for slowly varying signals such as ambient temperature measurements, but more sophisticated prediction algorithms are necessary for dynamic systems in which missing data could be significant for the application.

Embedded sensor fusion implementations often employ temporal buffering and predictive models to estimate missing sensor values. This approach, however, must carefully balance prediction accuracy with memory usage and computational overhead.

The challenge for the design engineer in multi-sensor algorithm tuning is to judge the best buffer depths and prediction horizons to provide for adequate fault tolerance without overwhelming the processor and its associated memory resources.
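The trade-off between buffer depth and prediction horizon can be sketched as follows. This is a minimal example, assuming a fixed sample period and simple linear extrapolation from the last two good samples; the class name `GapFiller` and its default parameters are hypothetical rather than taken from any particular library.

```python
from collections import deque


class GapFiller:
    """Estimate missing samples by linear extrapolation, up to a limit."""

    def __init__(self, depth=2, max_horizon=3):
        if depth < 2:
            raise ValueError("depth must be at least 2 to estimate a slope")
        self.history = deque(maxlen=depth)   # bounded buffer of good samples
        self.max_horizon = max_horizon       # longest gap we will predict over
        self.missed = 0                      # consecutive missing samples

    def update(self, sample):
        """Process one sample period; sample is None when data is missing.

        Returns (value, is_valid). The value is flagged invalid when there
        is not enough history, or when the gap exceeds max_horizon.
        """
        if sample is not None:
            self.history.append(sample)
            self.missed = 0
            return sample, True
        self.missed += 1
        if len(self.history) < 2 or self.missed > self.max_horizon:
            last = self.history[-1] if self.history else None
            return last, False
        slope = self.history[-1] - self.history[-2]
        return self.history[-1] + slope * self.missed, True


if __name__ == "__main__":
    filler = GapFiller()
    for reading in [1.0, 2.0, None, None, None, None, 2.5]:
        print(filler.update(reading))
```

A deeper buffer would allow a higher-order predictor at the cost of more RAM, while a longer horizon tolerates longer dropouts at the cost of less trustworthy estimates.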

The types of sensor fusion algorithm, and when to use them

The main types of sensor fusion algorithm used in embedded systems give the design engineer options to optimise for simplicity, performance, resource usage and speed.

Complementary filters offer the simplest approach to embedded sensor fusion, combining high-frequency data from one sensor with low-frequency data from another through basic filtering operations.

These algorithms require minimal compute resources, and prioritise efficiency over sophistication. Multi-sensor algorithm tuning typically requires the design engineer to adjust filter coefficients to balance noise rejection against response time.
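A minimal sketch of a complementary filter for a single tilt angle is shown below. It blends the integrated gyroscope rate, which is trusted over short time scales, with the angle implied by the accelerometer, which is trusted over long time scales. The function names, the coefficient `alpha = 0.98`, the 100 Hz update rate and the simulated gyroscope bias are assumptions made for illustration.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle (radians) implied by the measured gravity vector."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Return the new fused angle.

    alpha close to 1 favours the gyroscope (smooth, but drifts);
    a smaller alpha favours the accelerometer (noisy, but drift-free).
    """
    gyro_angle = angle + gyro_rate * dt
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


def simulate_stationary(steps=2000, dt=0.01, gyro_bias=0.05):
    """Level, stationary device whose gyroscope has a constant bias (rad/s).

    Returns (angle from gyroscope integration alone, fused angle).
    """
    gyro_only = 0.0
    fused = 0.0
    level = accel_pitch(0.0, 0.0, 9.81)
    for _ in range(steps):
        gyro_only += gyro_bias * dt
        fused = complementary_filter(fused, gyro_bias, level, dt)
    return gyro_only, fused


if __name__ == "__main__":
    print(simulate_stationary())
```

With the assumed bias of 0.05 rad/s, pure integration drifts by about 1 rad over 20 s, while the fused estimate settles at roughly 0.025 rad. Lowering `alpha` reduces that residual error further, but lets more accelerometer noise through.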

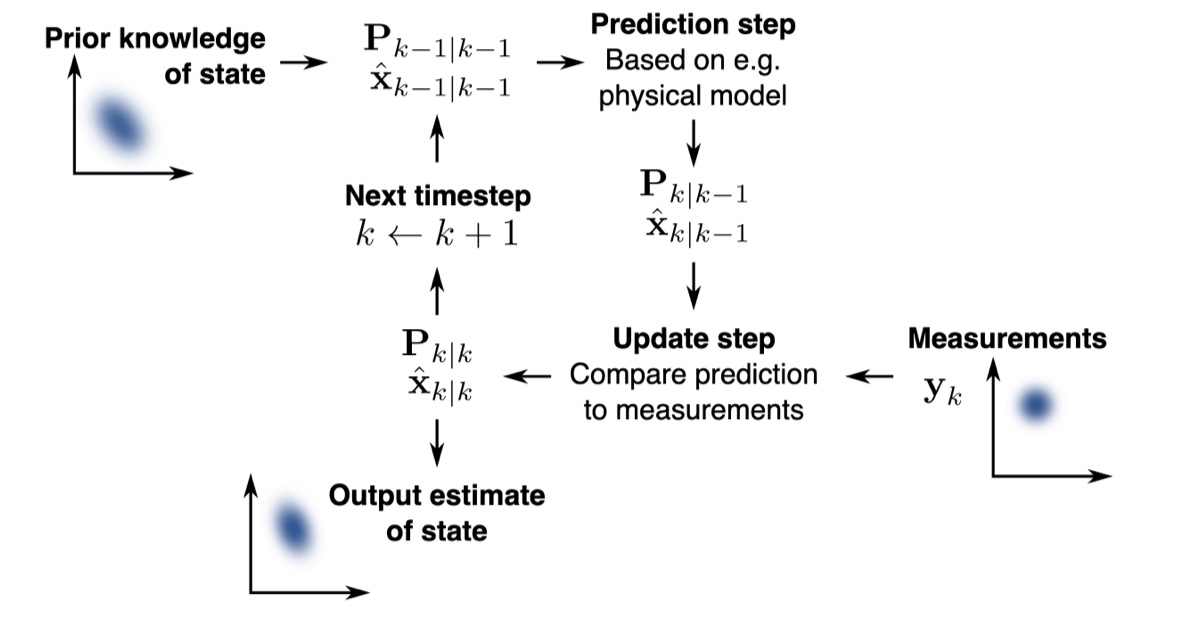

Kalman filters provide optimal linear estimation for embedded sensor fusion applications with well-defined system models and Gaussian noise characteristics.

The recursive nature of Kalman filtering enables efficient implementation in embedded systems. Even so, a Kalman filter’s matrix operations create the risk of overwhelming the compute capability of more limited embedded processors. Sensor fusion optimisation using Kalman filters requires careful tuning of process and measurement noise co-variances.
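For a single state variable, the matrix operations collapse to a few multiplications and one division, which makes the recursive structure easy to see. The sketch below is a scalar Kalman filter with an assumed constant-state model; the function name and the values of `q` and `r` are illustrative, and a multi-state filter would replace the scalars with matrices.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    x: state estimate, p: estimate variance, z: new measurement,
    q: process noise variance, r: measurement noise variance.
    Returns the updated (x, p) and the Kalman gain k.
    """
    # Predict: the state is modelled as constant, so only uncertainty grows.
    p = p + q
    # Update: weight the measurement by the relative uncertainties.
    k = p / (p + r)
    x = x + k * (z - x)
    p = (1.0 - k) * p
    return x, p, k


if __name__ == "__main__":
    x, p = 0.0, 1.0
    for z in [1.1, 0.9, 1.05, 0.95, 1.0]:
        x, p, k = kalman_step(x, p, z, q=0.001, r=0.1)
        print(round(x, 4), round(p, 4), round(k, 4))
```

Only the previous estimate and its variance need to be stored between updates, which is why the recursive form suits memory-constrained devices.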

Extended Kalman filters (EKFs) extend linear Kalman filtering to non-linear systems through linearisation techniques. While computationally more demanding than standard Kalman filters, EKFs enable sophisticated sensor fusion algorithms in applications requiring non-linear state estimation. Embedded implementations often employ simplified Jacobian calculations or pre-computed linearisation tables to reduce computational overhead when performing multi-sensor algorithm tuning.
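To illustrate the linearisation step, the sketch below applies a scalar EKF to an assumed toy problem: estimating horizontal distance `x` to a beacon mounted at a known height, from a slant-range measurement `z = sqrt(x² + height²)`. The scenario, the function name and the noise values are hypothetical; the point is only that the Jacobian of the measurement model is re-evaluated at the current estimate on every update.

```python
import math


def ekf_range_step(x, p, z, beacon_height, q, r):
    """One predict/update cycle of a scalar extended Kalman filter.

    Measurement model: h(x) = sqrt(x^2 + beacon_height^2), which is
    non-linear in x. beacon_height is assumed to be greater than zero.
    """
    # Predict: static state model, so only the variance grows.
    p = p + q
    # Linearise h(x) about the current estimate.
    predicted = math.hypot(x, beacon_height)
    h_jac = x / predicted            # dh/dx evaluated at the estimate
    # Update using the linearised model.
    s = h_jac * p * h_jac + r        # innovation variance
    k = p * h_jac / s                # Kalman gain
    x = x + k * (z - predicted)
    p = (1.0 - k * h_jac) * p
    return x, p


if __name__ == "__main__":
    # True distance is 4 m and the beacon is 3 m up, so the true range is 5 m.
    x, p = 2.0, 4.0
    for _ in range(50):
        x, p = ekf_range_step(x, p, 5.0, 3.0, q=0.0, r=0.01)
    print(x, p)
```

In a resource-constrained implementation, the square root and division in the Jacobian are the kind of operations that might be simplified or replaced with a pre-computed table.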

Particle filters offer robust performance in highly non-linear or non-Gaussian embedded sensor fusion applications, employing Monte Carlo sampling techniques. The computational intensity of particle filters, however, limits their use to embedded devices based on very powerful processors.

Machine learning-based approaches increasingly enable adaptive sensor fusion optimisation through learned sensor relationships and environmental patterns. Neural networks and deep learning models can automatically perform multi-sensor algorithm tuning, though implementing larger models in embedded devices typically requires a specialised hardware accelerator such as a neural processing unit (NPU).

These approaches excel in complex embedded sensor fusion scenarios in which traditional mathematical models are inadequate, enabling intelligent adaptation to varying operational conditions while maintaining real-time performance.

Optimisation techniques for sensor fusion algorithms

The practical application of sensor fusion algorithm optimisation can depend on the type of sensors of which a multi-sensor set-up is composed.

Sensor alignment

In a motion tracking system, for instance, the designer has to take care to align different sensor types such as accelerometers and gyroscopes, and also to transform their measurements into a common co-ordinate frame, format and scale.

Misaligned sensors require rotation matrices and translation corrections: these consume computational resources. Multi-sensor algorithm tuning must account for mounting tolerances, thermal expansion, and calibration drift to maintain accurate performance across a range of operating conditions.
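The cost of an alignment correction can be seen in a small sketch. The example below applies a rotation matrix and a translation to a three-axis measurement, which takes nine multiplications and nine additions per sample. The function names, the 90-degree mounting angle and the offset are assumed for illustration; translation applies to position-type measurements, and would be zero for a pure direction vector such as acceleration.

```python
import math


def rotation_z(angle_rad):
    """3x3 rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


def to_body_frame(rotation, translation, point):
    """Transform a point from the sensor frame to the body frame.

    Computes rotation * point + translation, row by row.
    """
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


if __name__ == "__main__":
    # Assumed mounting: sensor rotated 90 degrees about z, offset 0.1 m in x.
    r = rotation_z(math.pi / 2)
    print(to_body_frame(r, [0.1, 0.0, 0.0], [1.0, 0.0, 0.0]))
```

Because mounting tolerances are fixed after assembly, the matrix can usually be computed once during calibration and stored, so only the multiply-accumulate work is repeated at run time.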

Filter tuning

Filter tuning is an important technique for sensor fusion optimisation: an example is Q and R matrix adjustment in Kalman filters. Process noise co-variance (Q) and measurement noise co-variance (R) matrices require careful tuning to balance estimation accuracy against responsiveness.
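The effect of this tuning can be illustrated with a scalar filter, where Q and R reduce to single numbers `q` and `r`. The sketch below iterates the variance recursion until the Kalman gain settles, assuming a constant-state model; the function name and the example values are hypothetical.

```python
def steady_state_gain(q, r, iterations=200):
    """Iterate the scalar Kalman variance recursion and return the gain.

    A gain near 1 means the filter follows each new measurement closely
    (responsive but noisy); a gain near 0 means it relies on its own
    prediction (smooth but slow to react).
    """
    p = 1.0
    k = 0.0
    for _ in range(iterations):
        p = p + q              # predict: uncertainty grows by q
        k = p / (p + r)        # gain from relative uncertainties
        p = (1.0 - k) * p      # update: uncertainty shrinks
    return k


if __name__ == "__main__":
    for q in (0.0001, 0.01, 1.0):
        print(q, round(steady_state_gain(q, r=1.0), 4))
```

With `r` fixed at 1, raising `q` from 0.01 to 1 moves the settled gain from about 0.10 to about 0.62, which shows how the ratio of Q to R sets the balance between smoothness and responsiveness.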

When calibrating sensors or estimating their noise statistics, the designer can also adjust the size of the window of samples over which each estimate is made. Larger windows improve the precision of the estimate, but increase memory usage and processing latency.

Sensor weighting can also help improve the operation of a multi-sensor set-up, by prioritising inputs from a sensor or sensors that are known to be more accurate or reliable.
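One common way to weight sensors is in inverse proportion to their noise variance, so that the more precise sensor dominates the result. The sketch below assumes that each sensor's variance is known, for example from a datasheet or from bench testing; the function name and the readings are illustrative.

```python
def fuse_weighted(readings, variances):
    """Inverse-variance weighted average of independent readings.

    Returns (fused_value, fused_variance). The fused variance is never
    larger than the smallest input variance.
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    value = sum(w * x for w, x in zip(weights, readings)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Two temperature sensors; the second is assumed to be three times noisier.
    print(fuse_weighted([10.0, 12.0], [1.0, 3.0]))
```

In this example the fused value lies closer to the first, more precise sensor: 10.5 rather than the plain average of 11.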

For the more resource-constrained embedded devices that use a cheap microcontroller, fixed-point arithmetic generates a smaller compute workload than floating-point arithmetic. But multi-sensor algorithm tuning with fixed-point arithmetic requires careful scaling and precision analysis.

Fixed-point arithmetic also provides deterministic execution times, an essential requirement of real-time applications, though sensor fusion algorithms must account for quantisation effects and potential numerical instability during implementation.
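The scaling and precision issues can be illustrated with the Q15 format, in which a 16-bit signed integer represents a value in the range [-1, 1) with 15 fractional bits. The sketch below models Q15 conversion and multiplication using Python integers; the helper names are hypothetical, and on a real microcontroller the same operations would typically be written in C or provided by a vendor DSP library.

```python
Q15_ONE = 1 << 15   # 32768 represents 1.0; the largest storable value is 32767


def to_q15(x):
    """Convert a float to Q15, rounding and saturating at the limits."""
    return max(-Q15_ONE, min(Q15_ONE - 1, int(round(x * Q15_ONE))))


def from_q15(a):
    """Convert a Q15 integer back to a float."""
    return a / Q15_ONE


def q15_mul(a, b):
    """Multiply two Q15 values with rounding, saturating to 16 bits.

    The raw product has 30 fractional bits, so it is shifted right by 15
    after adding half an LSB for rounding.
    """
    product = (a * b + (1 << 14)) >> 15
    return max(-Q15_ONE, min(Q15_ONE - 1, product))


if __name__ == "__main__":
    alpha = to_q15(0.98)                      # e.g. a filter coefficient
    print(alpha, from_q15(alpha))             # quantised coefficient
    print(from_q15(q15_mul(to_q15(0.5), to_q15(0.5))))
```

The coefficient 0.98 cannot be represented exactly, so the filter actually runs with a slightly different value; precision analysis consists of checking that such errors, accumulated over many updates, stay within the application's tolerance.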

Handling noise and uncertainty in multi-sensor systems

Any approach to sensor fusion optimisation has to take account of the real-world variances which such systems encounter.

The variances can include those present in the raw data, which can be pre-filtered with low-pass, moving average, or median filters.
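The difference between two of these pre-filters shows up clearly when the raw data contains a single spike. The sketch below compares a moving average with a median filter over an assumed three-sample window; the class names and sample values are illustrative.

```python
from collections import deque
from statistics import mean, median


class MovingAverage:
    """Average of the most recent `window` samples."""

    def __init__(self, window=3):
        self.buf = deque(maxlen=window)

    def update(self, x):
        self.buf.append(x)
        return mean(self.buf)


class MedianFilter:
    """Median of the most recent `window` samples."""

    def __init__(self, window=3):
        self.buf = deque(maxlen=window)

    def update(self, x):
        self.buf.append(x)
        return median(self.buf)


if __name__ == "__main__":
    raw = [1.0, 1.0, 50.0, 1.0, 1.0]          # one spurious spike
    avg, med = MovingAverage(), MedianFilter()
    print([avg.update(x) for x in raw])
    print([med.update(x) for x in raw])
```

The moving average smears the spike across three outputs, while the median filter removes it entirely. The median filter, however, needs a sort or selection step per sample, which costs more cycles than a running sum.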

Inherent variance in the outputs from a population of nominally identical sensors must also be estimated from empirical testing: the optimisation algorithm can then adjust for these variances dynamically.

Where a multi-sensor set-up produces a measurement that looks like a corner case, the optimisation algorithm can use confidence scoring to decide dynamically whether the outlying result should be rejected or accepted.
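One possible form of confidence scoring compares each measurement with the value the fusion algorithm predicted, normalised by the expected variance. The scoring function, the function names and the threshold below are assumptions chosen for illustration; with this particular score, a minimum confidence of 0.1 corresponds to rejecting measurements more than three standard deviations from the prediction.

```python
def confidence(measurement, predicted, variance):
    """Map the normalised squared residual to a score in (0, 1].

    A measurement equal to the prediction scores 1; the score falls
    towards 0 as the measurement moves away from the prediction.
    """
    d2 = (measurement - predicted) ** 2 / variance
    return 1.0 / (1.0 + d2)


def accept(measurement, predicted, variance, min_confidence=0.1):
    """Decide whether a measurement should be used in the fusion update."""
    return confidence(measurement, predicted, variance) >= min_confidence


if __name__ == "__main__":
    # Prediction of 10.0 with a standard deviation of 0.5 (variance 0.25).
    print(accept(10.5, 10.0, 0.25), accept(13.0, 10.0, 0.25))
```

Rather than a hard accept/reject decision, the score could also be used to scale down the weight given to a doubtful measurement.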

The multi-sensor system designer may also want to consider whether a combination of redundancy and consensus between sensors is warranted to maintain system operation in the event of loss of signal from a sensor or of sensor failure.
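A simple consensus scheme for redundant sensors is sketched below. It assumes three nominally identical sensors measuring the same quantity, marks a lost sensor with `None`, and averages only the readings that agree with the median to within a tolerance. The function name, the tolerance and the readings are hypothetical.

```python
from statistics import median


def consensus(readings, tolerance):
    """Fuse redundant readings, ignoring lost or disagreeing sensors.

    readings: list of floats, with None for a sensor that gave no data.
    Returns (fused_value, number_of_agreeing_sensors), or (None, 0) when
    no sensor is available.
    """
    available = [r for r in readings if r is not None]
    if not available:
        return None, 0
    mid = median(available)
    agreeing = [r for r in available if abs(r - mid) <= tolerance]
    return sum(agreeing) / len(agreeing), len(agreeing)


if __name__ == "__main__":
    print(consensus([20.1, 19.9, 35.0], tolerance=0.5))   # one faulty sensor
    print(consensus([20.1, None, 19.9], tolerance=0.5))   # one lost sensor
```

The count of agreeing sensors gives the application a measure of how much redundancy remains, which can be used to trigger a warning before the last healthy sensor is lost.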

Validation and testing of fusion algorithms

Validating embedded sensor fusion systems requires comprehensive testing across operational environments and edge cases: some might not be apparent during development of the product in the laboratory.

The challenge lies in developing test methodologies that can accurately assess sensor fusion optimisation under real-world conditions while maintaining repeatability and traceability. Use of platforms such as MATLAB or Gazebo for initial prototyping enables the designer to simulate system operation at an early stage of development, and so eliminate impediments to sensor fusion optimisation before any commitment to firm decisions about hardware architecture and components.

System architects must implement built-in test capabilities that enable continuous monitoring of sensor fusion performance and automatic detection of degraded operation. These monitoring systems must operate within the same resource constraints on embedded real-time processing as the primary sensor fusion algorithms, while providing sufficient diagnostic information for system maintenance and optimisation. Key values to measure include root mean squared error, latency, stability, and convergence time.
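Two of these values can be computed from a logged test run in a few lines. The sketch below assumes that a reference ("truth") signal is available, for example from a simulator or a higher-grade instrument, and defines convergence as the first sample after which the error stays within a chosen tolerance. The function names, that definition and the sample data are assumptions made for illustration.

```python
import math


def rmse(estimates, truth):
    """Root mean squared error between estimates and reference values."""
    squared = [(e - t) ** 2 for e, t in zip(estimates, truth)]
    return math.sqrt(sum(squared) / len(squared))


def convergence_index(estimates, truth, tolerance):
    """Index of the first sample from which the error stays in tolerance.

    Returns None if the error is still outside tolerance at the last sample.
    Multiply the index by the sample period to obtain a convergence time.
    """
    last_bad = -1
    for i, (e, t) in enumerate(zip(estimates, truth)):
        if abs(e - t) > tolerance:
            last_bad = i
    first_good = last_bad + 1
    return first_good if first_good < len(estimates) else None


if __name__ == "__main__":
    est = [0.0, 0.5, 0.9, 1.0, 1.0]
    ref = [1.0] * 5
    print(rmse(est, ref), convergence_index(est, ref, tolerance=0.2))
```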

Failure mode analysis

Robust embedded sensor fusion systems must gracefully handle sensor failures, communication errors, and environmental extremes. This necessitates the design of sensor fusion algorithms which can detect and compensate for individual sensor failures while maintaining overall system functionality, and which remain robust to sensor noise.

Multi-sensor algorithm tuning must account for these failure scenarios, implementing fallback modes and strategies for degraded operation that maintain critical functionality even when primary sensors become unavailable. This requires careful analysis of sensor interdependencies and the development of robust fault detection and isolation mechanisms.
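A fallback strategy can be as simple as a staleness check on each sensor followed by a mode decision. The sketch below borrows the drone example of an IMU combined with a GNSS receiver; the sensor names, the mode names and the timeout are hypothetical, and a real system would add checks on data quality as well as data age.

```python
def select_mode(now_ms, last_update_ms, timeout_ms):
    """Choose a fusion mode from the age of each sensor's latest sample.

    last_update_ms maps a sensor name to the timestamp of its last good
    sample. A sensor is treated as failed once its data is older than
    timeout_ms.
    """
    alive = {name for name, t in last_update_ms.items()
             if now_ms - t <= timeout_ms}
    if {"gnss", "imu"} <= alive:
        return "full"              # normal fused navigation
    if "imu" in alive:
        return "dead_reckoning"    # degraded: inertial data only
    return "safe_stop"             # no trustworthy motion data


if __name__ == "__main__":
    print(select_mode(1000, {"gnss": 990, "imu": 999}, timeout_ms=50))
    print(select_mode(1000, {"gnss": 700, "imu": 999}, timeout_ms=50))
    print(select_mode(1000, {"gnss": 990, "imu": 100}, timeout_ms=50))
```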

Future trends in embedded sensor fusion

Like so much else in the field of embedded computing, sensor fusion optimisation looks set to be strongly affected by the rise of machine learning and artificial intelligence (AI).

For instance, it is likely that machine learning models implemented with neural networks could replace or complement the traditional filters used for signal conditioning in multi-sensor set-ups.

In addition, OEMs’ desire to implement AI at the edge and endpoint is engendering a shift towards more powerful microcontrollers and microprocessors with superior compute capacity to handle the larger code bases of AI algorithms. This increased compute capacity could also provide system designers with the scope to implement more compute-intensive sensor fusion optimisation algorithms, and thus to achieve higher accuracy at lower latency.

Event-based sensing

Another coming trend is the adoption of event-based sensing: processing data only when meaningful changes occur, rather than at fixed intervals. This reduces computational load and power consumption while maintaining responsiveness to important events. In sensor fusion systems, event-driven architectures improve efficiency and reduce processing overhead, though implementation complexity increases because sampling rates vary over time, which calls for sophisticated buffering and synchronisation mechanisms.
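A simple software form of this idea is a send-on-delta scheme, in which a sample is passed on for processing only when it differs from the last reported value by more than a threshold. The sketch below is a minimal example; the function name, the threshold and the sample values are assumed for illustration.

```python
def send_on_delta(samples, threshold):
    """Return (index, value) events for samples that changed meaningfully.

    The first sample is always reported. After that, a sample is reported
    only if it differs from the last reported value by more than threshold.
    """
    events = []
    last = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) > threshold:
            events.append((i, x))
            last = x
    return events


if __name__ == "__main__":
    temps = [20.0, 20.1, 20.05, 21.0, 21.1, 25.0]
    print(send_on_delta(temps, threshold=0.5))
```

In this example only three of the six samples generate events. The index kept with each event shows why timestamps matter in such a system: the fusion algorithm can no longer assume that samples arrive at a fixed interval.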

Conclusion

Because of the increased capability of modern processors used in embedded systems, multi-sensor set-ups are becoming increasingly popular. Hand-in-hand with this is the wider implementation of techniques for sensor fusion optimisation. These techniques cover a broad range from sensor alignment and filter tuning, to methods for eliminating or compensating for noise and errors.

In the application of these techniques, embedded system designers will need to remain cognisant of the constraints imposed by typical embedded hardware architectures, and especially:

- Compute capacity

- Power consumption, particularly in battery-powered systems

As so often in embedded design engineering, successful designs manage the fine balance between performance, latency, and power.

To find out more about this and many other topics of interest to electronics design engineers, explore the Trending Tech section, find relevant development boards, and subscribe for product news and technology explainers on the ipXchange YouTube channel.
