Published

12 August 2025

Written by Elliott Lee-Hearn

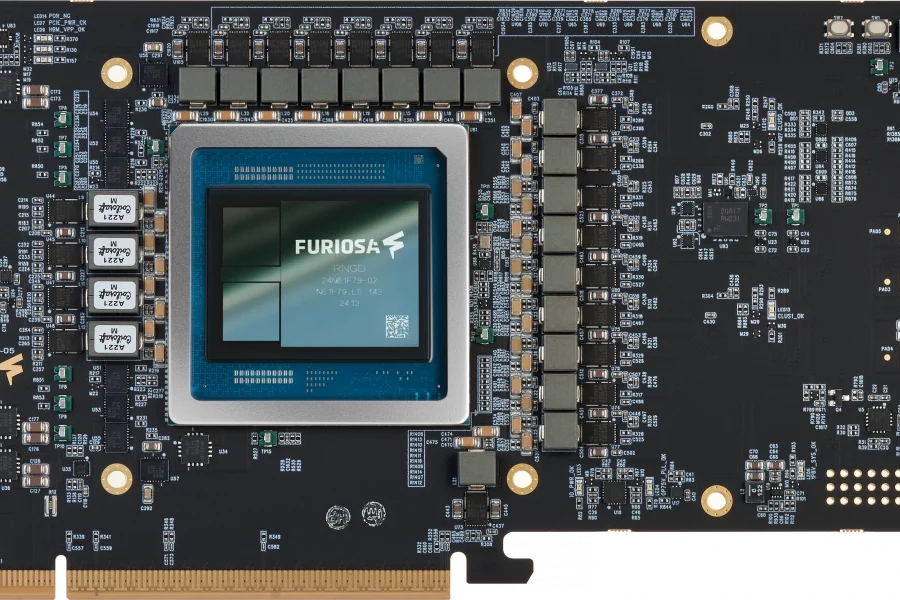

Furiosa AI, often referred to as “Korea’s answer to Nvidia”, is pushing AI inference efficiency to new levels with RNGD (pronounced ‘renegade’), its second-generation AI accelerator card. RNGD is built on a Tensor Contraction Processor (TCP) architecture rather than a conventional matrix-multiplication design. It delivers up to 512 teraflops of compute while consuming under 180 W. This power efficiency lets data centre operators cut cooling requirements and reduce operating costs, both key factors in large-scale AI deployment.

The company’s first product, Warboy, was a vision accelerator optimised for YOLO models in object detection and segmentation. RNGD extends that foundation into large language models (LLMs), with native support for LLaMA 8B on a single card and LLaMA 70B across multiple cards. Furiosa’s PyTorch 2.0-compatible compiler lets developers run their existing models without complex integration work.
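A rough, weights-only estimate shows why an 8B model fits on one card while a 70B model needs several. The sketch takes the 48 GB of HBM3 per RNGD card quoted in the article. It assumes the standard 2 bytes per parameter for BF16 weights and 1 byte for FP8. It deliberately ignores KV cache, activations and runtime overhead, so the card counts are lower bounds rather than Furiosa's actual deployment figures.

```python
import math

# From the article: each RNGD card carries 48 GB of HBM3.
HBM_PER_CARD_GB = 48

# Assumption: common weight formats and their size per parameter.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weight_footprint_gb(params_billion: float, fmt: str) -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[fmt]


def min_cards_for_weights(params_billion: float, fmt: str,
                          hbm_per_card_gb: float = HBM_PER_CARD_GB) -> int:
    """Lower bound on card count: ignores KV cache, activations and overhead."""
    return math.ceil(weight_footprint_gb(params_billion, fmt) / hbm_per_card_gb)


if __name__ == "__main__":
    for params in (8, 70):
        for fmt in ("bf16", "fp8"):
            print(f"{params}B {fmt}: {weight_footprint_gb(params, fmt):.0f} GB "
                  f"-> at least {min_cards_for_weights(params, fmt)} card(s)")
```

Under these assumptions, an 8B model needs about 16 GB in BF16 and fits comfortably on one card. A 70B model needs about 140 GB in BF16, which means at least three cards before any KV cache is counted.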

TCP architecture advantage

While most AI accelerators are built around matrix multiplication, Furiosa’s TCP treats tensor contraction, the generalisation of matrix multiplication to multi-dimensional arrays, as its basic operation. Tensors can therefore be processed directly instead of first being broken down into a series of two-dimensional matrix multiplications. This approach improves both power efficiency and performance, and makes tensor contraction, rather than matrix multiplication, the primary building block of inference. The RNGD PCIe Gen 5 card integrates a Furiosa ASIC built on TSMC process technology and 48 GB of SK hynix HBM3. It provides 1.5 TB/s of memory bandwidth alongside 256 MB of on-chip SRAM.
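To illustrate the mathematical relationship behind this (not how the TCP hardware is actually implemented), the toy sketch below contracts a 3-D tensor A[b][i][k] with a matrix W[k][j] over the shared index k. It computes the result two ways: directly, and by first flattening the (b, i) axes into one row axis so that an ordinary 2-D matrix multiplication can be used. The function names and nested-list representation are illustrative assumptions.

```python
def contract_direct(a, w):
    """Tensor contraction C[b][i][j] = sum_k A[b][i][k] * W[k][j], no reshaping."""
    B, I, K = len(a), len(a[0]), len(a[0][0])
    J = len(w[0])
    return [[[sum(a[b][i][k] * w[k][j] for k in range(K))
              for j in range(J)]
             for i in range(I)]
            for b in range(B)]


def matmul(x, y):
    """Plain 2-D matrix multiplication of an R x K matrix by a K x C matrix."""
    return [[sum(x[r][k] * y[k][c] for k in range(len(y)))
             for c in range(len(y[0]))]
            for r in range(len(x))]


def contract_via_matmul(a, w):
    """Same contraction lowered to a matmul: flatten (b, i), multiply, reshape back."""
    B, I = len(a), len(a[0])
    flat = [a[b][i] for b in range(B) for i in range(I)]  # (B*I) x K matrix
    out = matmul(flat, w)                                  # (B*I) x J matrix
    return [out[b * I:(b + 1) * I] for b in range(B)]      # back to B x I x J


A = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]  # shape 2 x 2 x 2
W = [[1, 0, 2], [0, 1, 3]]                # shape 2 x 3
print(contract_direct(A, W))
```

Both routes give the same numbers. The difference is that the matmul route needs the extra reshape steps around the multiplication, and the contraction route avoids them.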

RNGD draws roughly half the power of comparable GPUs, which typically consume around 350 W. As a result, it not only reduces running costs but can also rely on standard air cooling rather than liquid cooling.
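The arithmetic behind these figures can be sketched using only the numbers quoted in the article: 512 teraflops peak, under 180 W for RNGD, and around 350 W for a typical GPU. The sketch assumes both cards run continuously at those power levels all year and ignores cooling overhead. Real savings depend on utilisation and on what each card achieves at a given numeric precision.

```python
# Figures quoted in the article.
RNGD_PEAK_TFLOPS = 512
RNGD_POWER_W = 180   # "under 180 W", treated here as an upper bound
GPU_POWER_W = 350    # "typically around 350 W"

# Assumption: continuous operation, 24 hours a day for a 365-day year.
HOURS_PER_YEAR = 24 * 365


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak compute delivered per watt of board power."""
    return tflops / watts


def annual_energy_saving_kwh(baseline_w: float, new_w: float,
                             hours: float = HOURS_PER_YEAR) -> float:
    """Energy saved per card per year, in kWh, at constant power draw."""
    return (baseline_w - new_w) * hours / 1000


if __name__ == "__main__":
    print(f"RNGD peak efficiency: {tflops_per_watt(RNGD_PEAK_TFLOPS, RNGD_POWER_W):.2f} TFLOPS/W")
    print(f"Power ratio vs GPU: {RNGD_POWER_W / GPU_POWER_W:.2f}")
    print(f"Saving per card: {annual_energy_saving_kwh(GPU_POWER_W, RNGD_POWER_W):.1f} kWh/year")
```

Under these assumptions, each card saves roughly 1,490 kWh a year. Any reduction in cooling load would come on top of that.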

Partnership with Hosted.AI

To bring RNGD to market more broadly, Furiosa has partnered with Hosted.AI, a company providing turnkey software stacks for service providers. Hosted.AI’s platform enables more than 3,000 mid-to-large service providers worldwide to deploy “inference as a service” within 24 hours, without developing their own AI cloud infrastructure.

Narender, CEO of Hosted.AI, explains that most service providers cannot compete with hyperscalers on AI services because they lack the necessary software integration. Hosted.AI’s infrastructure virtualisation can be combined with Furiosa’s hardware, which delivers high tokens per second per watt per dollar. This combination lets providers overprovision AI resources, increase utilisation, and achieve profitability in a market where GPU-based cloud services face shrinking margins.
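The article does not define how tokens per second per watt per dollar is calculated. The sketch below assumes the simplest reading: measured throughput divided by both board power and card cost. The `Accelerator` class and its field names are hypothetical and do not correspond to any Furiosa or Hosted.AI API.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical description of one accelerator card under test."""
    name: str
    tokens_per_second: float  # measured inference throughput
    power_w: float            # average board power during the measurement
    price_usd: float          # purchase or amortised cost of the card


def tokens_per_sec_per_watt_per_dollar(acc: Accelerator) -> float:
    """Assumed figure of merit: throughput divided by both power and cost."""
    if acc.power_w <= 0 or acc.price_usd <= 0:
        raise ValueError("power and price must be positive")
    return acc.tokens_per_second / (acc.power_w * acc.price_usd)


def relative_advantage(a: Accelerator, b: Accelerator) -> float:
    """How many times higher card `a` scores than card `b` on the metric."""
    return tokens_per_sec_per_watt_per_dollar(a) / tokens_per_sec_per_watt_per_dollar(b)
```

Take two cards with identical throughput and price. The 180 W versus 350 W power draw quoted in the article alone gives a ratio of 350/180, or about 1.94. In practice, throughput and price also differ between cards, so the metric only means something when it is computed from measured numbers.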

Target applications

Furiosa and Hosted.AI see RNGD being deployed in data centres, edge data centres, and global inference processing networks, primarily for LLM inference workloads. Service providers can tailor these workloads to regional or customer-specific needs while benefiting from reduced power and cooling costs.

With AI model sizes and complexity continuing to grow, Furiosa’s Tensor Contraction Processor approach offers a compelling alternative to the dominant GPU architectures, delivering high performance with significantly lower operational overhead.
