Register your interest

In the DeepX M.2 AI Accelerator

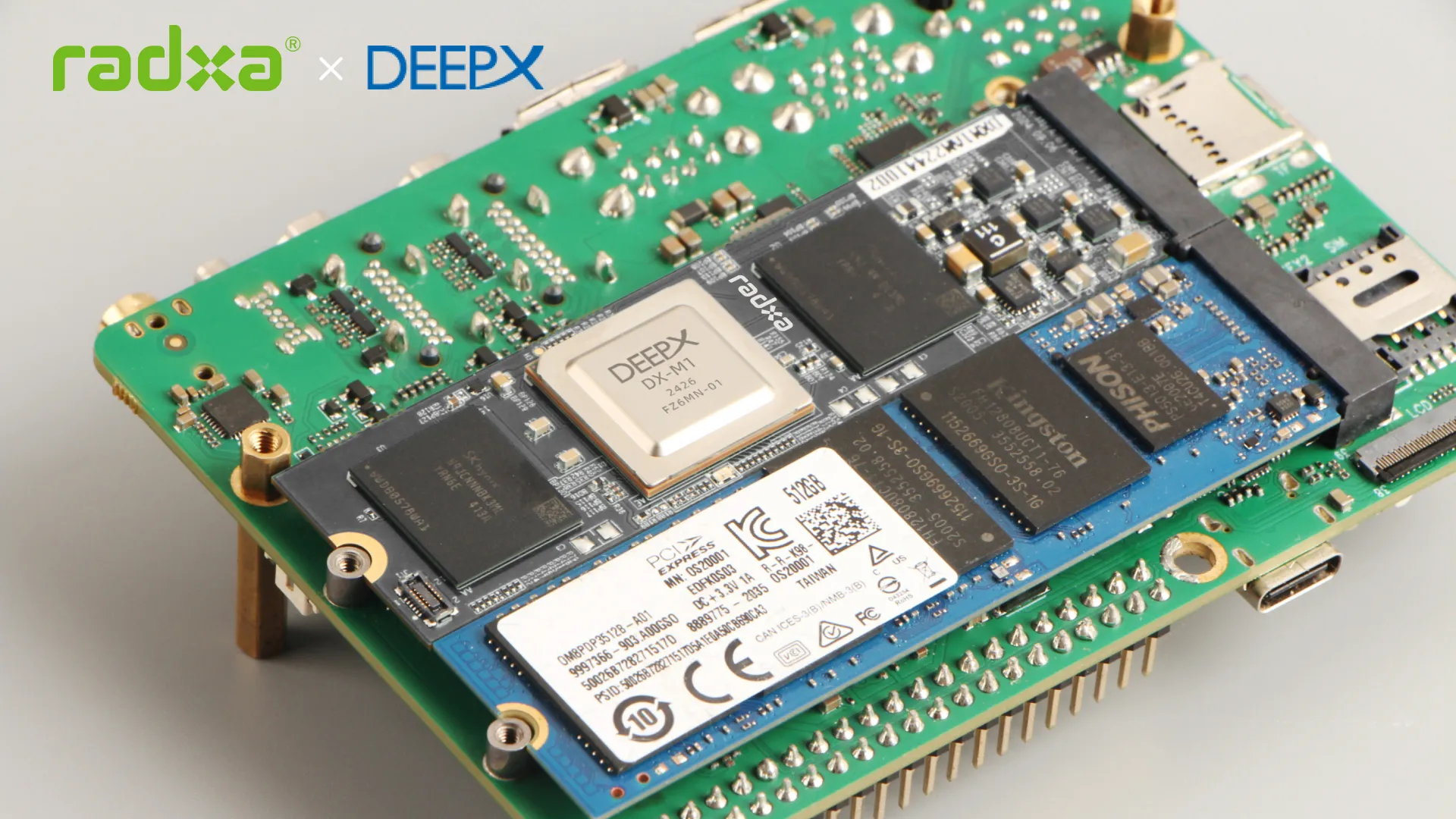

The DeepX M.2 AI Accelerator is a compact system-on-module that makes integrating edge AI into embedded systems faster, easier, and more efficient. Introduced at Embedded World 2025, this plug-and-play module uses the standard M.2 form factor, so engineers can slot AI capabilities directly into existing carrier boards with minimal development effort.

Unlike conventional SoMs built around general-purpose MCUs, the DeepX M.2 AI Accelerator is engineered specifically for machine learning workloads. It sidesteps the usual compute limits of embedded platforms by adding dedicated AI acceleration without a complete system redesign. Engineers can upgrade legacy hardware by dropping the accelerator into an existing M.2 slot, or build new designs by mounting it on DeepX's carrier board.

The carrier board is more than a breakout: it is a ready-made development platform that includes the necessary interfaces and software support. With DeepX's SDK and sample models, users can have AI applications running within minutes. A live demo at Embedded World showed on-device face recognition for access control running in real time on a fully self-contained setup.

For design engineers, the main benefit is speed. System-on-modules exist to shorten prototyping and lower the barrier to entry for complex system design, and the DeepX M.2 AI Accelerator extends that benefit to AI development. There is no need to source and validate GPUs, no time spent building new boards, and no delays caused by fragmented software stacks: plug in the module, run your models, and iterate.

DeepX also provides comprehensive SDK documentation, with tools for porting TensorFlow Lite and ONNX models onto the platform. Developers can tune inference performance with DeepX's runtime optimisations for applications such as security cameras, smart access systems, and automation gateways.

Edge AI adoption often stalls at the integration phase, and DeepX aims to remove that roadblock. The DeepX M.2 AI Accelerator is a complete, portable compute solution ready for deployment in real-world applications. Engineers working on time-sensitive projects or in resource-constrained environments will appreciate how quickly the module can move from evaluation to production.

Whether you’re scaling up vision systems or enabling intelligence in constrained edge devices, DeepX offers a straightforward route to high-performance AI.
